AI makes scientists more productive and less happy

“The most revolutionary thing one can do is always to proclaim loudly what is happening.”

- Rosa Luxemburg

“As the art increases, the artisan diminishes.”

- Quotation wrongly attributed to Adam Smith

Aidan Toner-Rodgers considers the effect of generative AI on a large R&D materials design lab with 1,018 materials researchers. Fortuitously, different researchers at the lab received access to the AI software at different points in time. This allowed for an experimental design through random assignment.

The AI software in question was: “Trained on the composition and characteristics of existing materials, the model generates ‘recipes’ for novel compounds predicted to possess specified properties.” Although the technology is different, the underlying principle is not so different from that of LLMs. The machine is “trained” on a large database of [text OR images OR materials] and then outputs proposed new [text OR images OR materials] using a deep neural network.

Did it work? Yes, exceptionally:

“AI-assisted scientists discover 44% more materials. These compounds possess superior properties, revealing that the model also improves quality. This influx of materials leads to a 39% increase in patent filings and, several months later, a 17% rise in product prototypes incorporating the new compounds. Accounting for input costs, the tool boosts R&D efficiency by 13-15%”

These are huge gains, but it is easy to miss the larger implications. We must not assess what this means by imagining a permanent, flat increase in output in materials science. This technology, in materials science and beyond, will continue to improve in both the range and the power of its applications. So often have I seen foolish attempts to guess what will come of AI by holding the technology fixed: educators, for example, who assume that LLMs will always be unable to gather references, or will always suffer from obvious stylistic tells (“delve”). In the worst cases, not even today’s but yesterday’s technology is taken as the fixed point from which probable outcomes are divined. At one presentation, I saw a prominent AI researcher claim an intrinsic limitation of LLMs on the basis of a failure of ChatGPT 3.5; even as he spoke, I put the question to GPT-4 and got the right answer. Generative AI for materials science, and for science and intellectual output generally, has been getting a lot better quickly, and it will keep doing so.

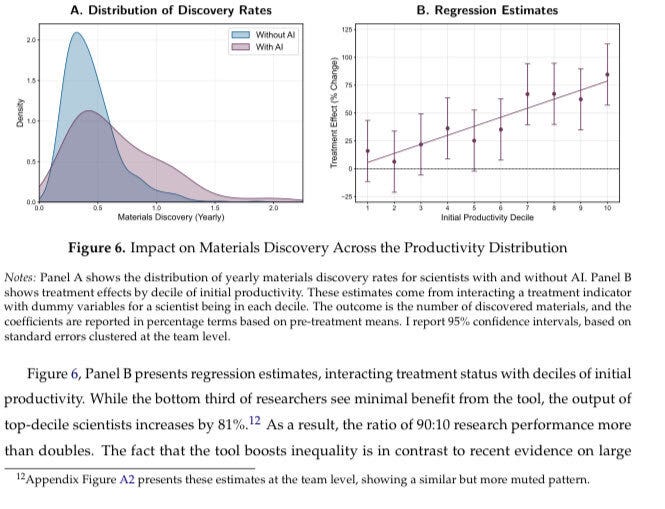

Some had hoped that AI would be an equalizer, making humans converge in output. The opposite is true. AI makes the very good very much better, but has comparatively little effect on the mediocre:

The top researchers became over 75% more productive. The bottom researchers became less than 25% more productive. Why? In essence, it seems to be because the more adept researchers were better at critically evaluating the output of the AI. One might hope that the weaker researchers would still make considerable gains from the machine’s help with idea generation, since this would allow them to compete with better researchers possessing stronger native talents in creativity. However, any such effect was relatively weak. The benefits of AI access went largely to those who were already good at materials science: “Evaluation ability is positively correlated with initial productivity, explaining the widening inequality in scientists’ performance.”

One might hope that since higher-order skills like evaluation are being engaged more, these scientists would find greater enjoyment in their work. They didn’t. Idea generation had been one of the more enjoyable parts of their work, and with generative AI they spent far less time on it. The effect was huge: “In the absence of AI, researchers devote nearly half their time to conceptualizing potential materials. This falls to less than 16% after the tool’s introduction.”

As a result:

“Researchers experience a 44% reduction in satisfaction with the content of their work. This effect is fairly uniform across scientists, showing that even the ‘winners’ from AI face costs. Respondents cite skill underutilization and reduced creativity as their top concerns, highlighting the difficulty of adapting to rapid technological progress. Moreover, these results challenge the view that AI will primarily automate tedious tasks, allowing humans to focus on more rewarding activities. While enjoyment from improved productivity partially offsets this negative effect, especially for high-ability scientists, 82% of researchers see an overall decline in wellbeing.”

One might suspect that although AI increases the quantity of output, that output is not truly novel in the way a human scientist’s work might be. On the contrary, the lab’s research output appeared to become more novel. However, let me express some skepticism here about measuring creativity in this domain, especially the long-tailed creativity that really matters. Here is what Toner-Rodgers has to say on the subject:

“I first measure the originality of new materials themselves, following the chemical similarity approach of De et al. (2016). Compared to existing compounds, model-generated materials have more distinct physical structures, suggesting that AI unlocks new parts of the design space. Second, I show that this leads to more creative inventions. Patents filed by treated scientists are more likely to introduce novel technical terms—a leading indicator of transformative technologies (Kalyani, 2024). Third, I find that AI changes the nature of products: it boosts the share of prototypes that represent new product lines rather than improvements to existing ones, engendering a shift toward more radical innovation (Acemoglu et al., 2022a).”

In other words, across three measures of creativity (chemical similarity, use of new technical terms, and new product lines versus improvements to existing ones), AI-assisted researchers are more creative than ‘mere’ humanity. Again, creativity is difficult to measure, but the result is at least interesting.

Another experiment, by Swanson et al., used a team of large language models to design novel nanobodies (small antibody fragments) that bind SARS-CoV-2. The experiment used an increasingly common methodology: (1) create a “team” of large language models, (2) assign each a role, and (3) have them talk and plan among themselves. Roles included a chemist agent, a computer scientist agent, and a critic agent. A human scientist was involved and gave high-level feedback. The machines also had access to tools including ESM, AlphaFold-Multimer, and Rosetta. The results?

“Experimental validation of those designs reveals a range of functional nanobodies with promising binding profiles across SARS-CoV-2 variants. In particular, two new nanobodies exhibit improved binding to the recent JN.1 or KP.3 variants of SARS-CoV-2 while maintaining strong binding to the ancestral viral spike protein, suggesting exciting candidates for further investigation.”
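The three-step methodology described above (build a team of models, give each a role, let them confer) can be illustrated with a toy Python sketch. It is not Swanson et al.’s code: `Agent`, `run_meeting`, and `stub` are hypothetical names, the strict round-robin turn order is an assumption, and each stubbed reply stands in for what would really be a language-model call. The tools the agents used, such as ESM, AlphaFold-Multimer, and Rosetta, are not modeled at all.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Message:
    speaker: str  # role name of whoever produced this turn
    text: str


@dataclass
class Agent:
    role: str
    # Hypothetical stand-in for a language-model call: takes the shared
    # transcript so far and returns this agent's next contribution.
    respond: Callable[[List[Message]], str]


def run_meeting(
    agents: List[Agent],
    agenda: str,
    rounds: int = 2,
    human_feedback: Optional[Callable[[List[Message]], str]] = None,
) -> List[Message]:
    """Round-robin discussion: each agent speaks once per round, in order,
    seeing everything said so far; a human may comment after each round."""
    transcript = [Message("human", agenda)]
    for _ in range(rounds):
        for agent in agents:
            transcript.append(Message(agent.role, agent.respond(transcript)))
        if human_feedback is not None:
            transcript.append(Message("human", human_feedback(transcript)))
    return transcript


def stub(role: str) -> Callable[[List[Message]], str]:
    # Placeholder behaviour so the sketch runs without any model access.
    return lambda transcript: f"{role} reply to turn {len(transcript)}"


team = [
    Agent("chemist", stub("chemist")),
    Agent("computer scientist", stub("computer scientist")),
    Agent("critic", stub("critic")),
]

log = run_meeting(
    team,
    "Design nanobodies for new SARS-CoV-2 variants",
    rounds=2,
    human_feedback=lambda t: "Focus on JN.1 binding",
)
for m in log:
    print(f"{m.speaker}: {m.text}")
```

The design choice worth noticing is the shared transcript: every agent sees everything said so far, which is what lets a critic agent respond to the chemist’s proposal, while the human appears only as an occasional commenter between rounds.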

I wish to warn the reader against what I call the centaur presumption.

Centaur chess was a form of chess in which a grandmaster and a powerful chess computer worked together to choose moves. The hope was that the pair would outplay either a human or a computer playing alone. Computers were superior tacticians; humans were superior long-term positional strategists. The centaur presumption was that human skills and computer skills would remain complements for a long time. The reasoning was simple enough: some strategic choices required projecting 20-30 moves (40-60 ply) into the future, and computers just couldn’t calculate that far! Human strategy, based on experience and intuition rather than calculation, would continue to matter.

It didn’t last long, because the sphere in which humans were better rapidly shrank, as anyone should have been able to guess. Today, if a human thinks they have found a better move than the computer, you should essentially never trust them.

Now, am I saying that this particular wave of AI technology will be able to replace materials scientists in every respect within, say, ten years? No, I am not, although it is a possibility. What I am saying is that, like a boa constrictor, AI will steadily tighten the space in which a human is essential, or even makes a real contribution. For example, these models may gain skill in idea evaluation as well as idea generation. Perhaps human scientists will increasingly become mere lab technicians running experiments for computers. Exactly how fast this process will advance, I do not know.

Still less should we trust anyone who promises that the process will liberate human creativity. In some cases it may, but your default hypothesis should be that anything that substitutes for human creativity will not promote human creativity. There will be exceptions, but your rebuttable assumption should be that AI will displace aspects of the creative process. None of this will occur in a benign society concerned with ensuring there are no losers; it will occur in a society run by anti-social alchemists dreaming of a process by which gold can become more gold without an intermediate human. Nor is AI straightforwardly “just” a continuation of old automating technologies: there is a huge difference between automating motion and automating a huge swathe of cognition, whose exact boundary, if any, is uncertain. The flexibility of human cognition is the reason automation has never yet rendered human labor less valuable. When cognition itself is on the table, inducing from old patterns would be a mistake.

We cannot afford to stumble into this, and we cannot take our welfare for granted in a world where human labor is of decreasing relevance. We need to open our eyes and organize.

Anyway, I spend many hours a week on this blog and do not charge for its content except through voluntary donations. If you want to help out, would you mind adding my Substack to your list of recommended Substacks? It’s the main way people get new subscribers. A big thanks as always to my paid subscribers.

Our economy and meaning systems should not be co-reliant.

"The top researchers became over 75% more productive. The bottom researchers became less than 25% more productive."

This seems atypical to me. Looking at art and text, AI allows me (incapable of drawing a circle) to produce OK illustrations for my Substack posts. I doubt that it would do much for Michelangelo. On the other hand, it allows my illiterate students to produce what looks like proper text. The only use I have found is to do the same for dot points I input, and even then post-editing is needed to bring it up to scratch, put it in my own voice etc.

Summing up, in most domains, AI makes mediocrity easy but does nothing for expertise. Looking at your example, I wonder how "top" has been defined. If you mean "top users of AI", then your results are true by definition. But there's no reason to think these were the top scientists before AI, or even after AI. Economics is only dubiously a science, but the applications of AI/machine learning I've seen in it are mostly rubbish, and not for fixable reasons like hallucination. Pattern matching can only get you so far.