ChatGPT understands language

or if it doesn't, it fails for reasons other than the ones you think

The case for GPT understanding language, by way of understanding the world

This is adapted from a letter I sent

There's a debate going on about whether or not language models similar to ChatGPT have the potential to be scaled up to something truly transformative. There's a group of mostly cognitive linguists (e.g. Gary Marcus) who hold that ChatGPT does not understand language: it merely models which word is likely to follow the preceding ones, and this is importantly different from true language understanding. They see this as an "original sin" of language models, which means there are limits to how good language models can get.

Freddie de Boer says much the same thing:

You could say that ChatGPT has passed Winograd’s test with flying colors. And for many practical purposes you can leave it there. But it’s really important that we all understand that ChatGPT is not basing its coindexing on a theory of the world, on a set of understandings about the understandings and the ability to reason from those principles to a given conclusion. There is no place where a theory of the world “resides” for ChatGPT, the way our brains contain theories of the world. ChatGPT’s output is fundamentally a matter of association - an impossibly complicated matrix of associations, true, but more like Google Translate than like a language-using human. If you don’t trust me on this topic (and why would you), you can hear more about this from an expert on this recent podcast with Ezra Klein.

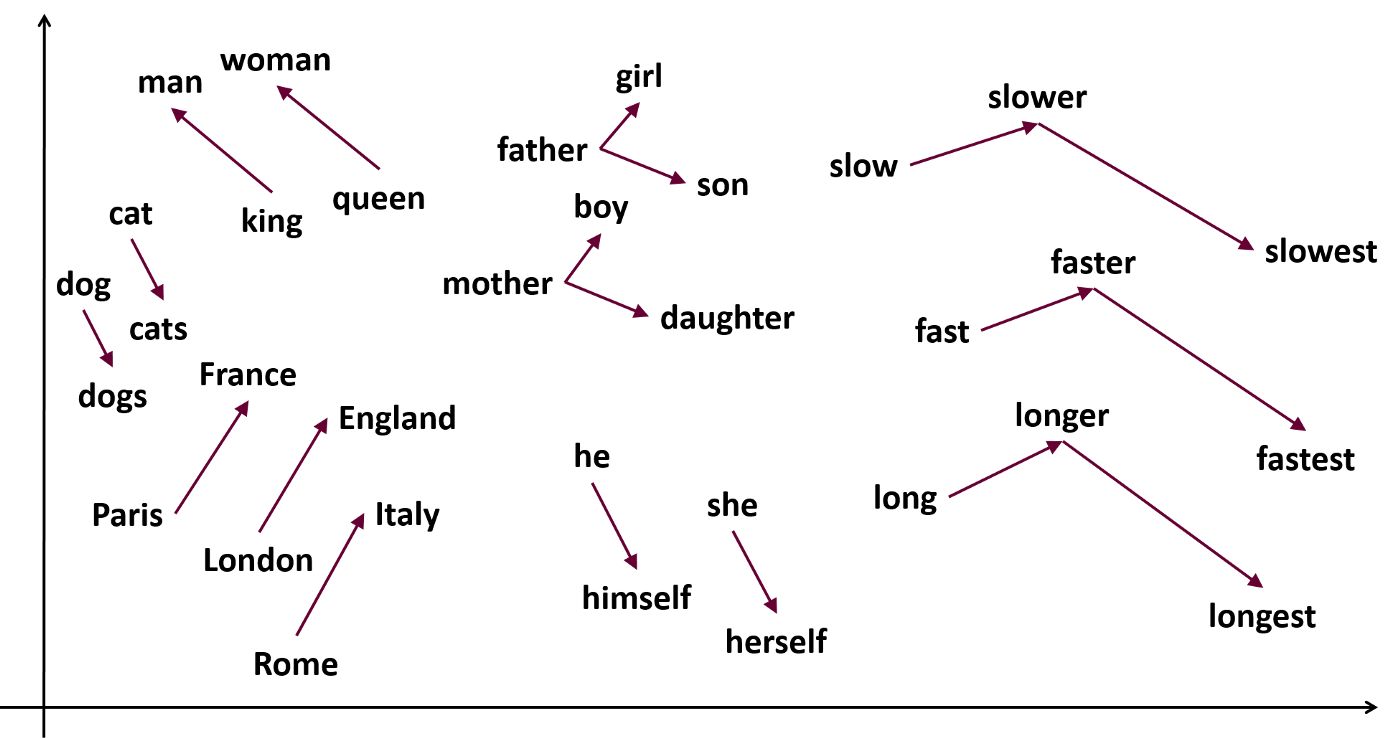

It is true that GPT works by predicting the next token of language. It is, in some sense, as Gary Marcus put it, “a glorified spreadsheet” built for this purpose. However, I do not think that this contradicts the notion that it understands, even if imperfectly, both language and the world. My view is that in a very large language corpus, there are patterns that correspond to the way things relate to each other in the world. As models of language become more sophisticated and predictively powerful, they necessarily become models not just of language, but of the data-generating process for a corpus of that language. That data-generating process for language includes a theory of the world. Given enough data (billions or even trillions of tokens) these models can be very accurate.
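To make "predicting the next token" concrete, here is a minimal sketch of a bigram predictor. It is nothing like GPT's real architecture, which conditions on long contexts rather than a single preceding word; the toy corpus and the names `train_bigram` and `predict_next` are made up for illustration. Even at this tiny scale, the statistics of which word follows which carry a trace of how things behave in the world: glasses break, balls bounce.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for each token, which tokens follow it in the corpus."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            follow_counts[prev][nxt] += 1
    return follow_counts

def predict_next(follow_counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = follow_counts.get(token.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus, invented for this example.
corpus = [
    "when dropped the glass broke",
    "when dropped the ball bounced",
    "the glass broke into pieces",
    "the ball bounced away",
]

model = train_bigram(corpus)
print(predict_next(model, "glass"))  # prints "broke"
print(predict_next(model, "ball"))   # prints "bounced"
```

The predictor was never told anything about glass or rubber. Whatever it "knows" about them is recovered from patterns in the text alone.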

We might say:

If X models Y, and Y models Z,

and if each model is sufficiently faithful,

Then X is a model of Z.
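One hedged way to make "sufficiently faithful" precise: assume, purely for illustration, that the fidelity of a model can be measured by some distance d between the model and its target, and that d obeys the triangle inequality. The symbols d, ε₁ and ε₂ are assumptions added here, not part of the original argument.

```latex
d(X, Y) \le \varepsilon_1, \quad d(Y, Z) \le \varepsilon_2
\;\Longrightarrow\;
d(X, Z) \le d(X, Y) + d(Y, Z) \le \varepsilon_1 + \varepsilon_2
```

On this reading, X's error as a model of Z is at most the sum of the two errors. The chain holds, but fidelity can degrade at each link, which is why the condition on each model matters.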

Since GPT is a superb model of language, and language corpora are such a superb model of the world, it seems to me that GPT is probably a model of the world. Moreover, ChatGPT in particular is a model very capable of responding to questions about the reality it models. I think that it’s usually true that if I contain a detailed model of something, and I can use that model to solve difficult problems about the thing it models, then I understand that thing to at least some degree. I want to say then that GPT understands the world- or that if it doesn’t understand the world, the problem isn’t that its understanding of language is ungrounded in extralinguistic factors.

But it seems there's a sort of prejudice against learning by direct exposure to language, as opposed to other kinds of sensory stimulus. Learning language, without being exposed to other sensory modalities of the world, is seen as disconnected from reality, but that seems rather unfair- why think a string of words alone is insufficient to infer an underlying reality, but a field of color patches alone might be sufficient?

Consider a kind of naive empiricist view of learning, in which one starts with patches of color in a field (vision), and slowly infers an underlying universe of objects through their patterns of relations and co-occurrence. Why is this necessarily any different or more grounded than learning by exposure to a vast language corpus, wherein one also learns through gaining insight into the relations of words and their co-occurrences?

Suppose that we trained a computer to get very good at predicting what visual experiences will follow after previous visual experiences. Imagine a different Freddie de Boer wrote this:

You could say that VisionPredictor has passed the test of predicting future patches of colour with flying colours. And for many practical purposes you can leave it there. But it’s really important that we all understand that VisionPredictor is not basing its predictions on a theory of the world, on a set of understandings about the understandings and the ability to reason from those principles to a given conclusion. There is no place where a theory of the world “resides” for VisionPredictor, the way our brains contain theories of the world. VisionPredictor’s output is fundamentally a matter of association - an impossibly complicated matrix of associations between patches of colour.

No one would buy this- they’d come to the more reasonable conclusion that VisionPredictor had constructed implicit theories about ideas like “chairs” and “trees” which are used to predict what the next frame of what it sees will look like. If something that looks like a chair is in one frame, there’ll probably be one in the next frame, looking the appropriate way given the apparent motion of the camera. Certainly, if VisionPredictor is vast- with billions of parameters- and complex in its structure- with dozens of successive layers- it will have enough room to store and implement such a theory. Moreover, a process of training via backpropagation and gradient descent will lead it- through a quasi-evolutionary process- to such a theory, as the only efficient way to predict the next visual frame.

So why do people have more trouble thinking that one could understand the world through pure text than through pure vision? I think people's different treatment of these cases- vision and language- may be caused by a poverty of stimulus- overgeneralizing from cases in which we have only a small amount of text. It's true that if I just tell you that all qubos are shrimbos, and all shrimbos are tubis, you'll be left in the dark about all of these terms, but that intuition doesn't necessarily scale up to a situation in which you are learning across billions of instances of words and come to understand their vastly complex patterns of co-occurrence with such precision that you can predict the next word with great accuracy. Based on that vast matrix of words- words from corpora being used to describe the world- you’d have enough data to construct a theory of what the underlying reality that generated those corpora looked like.

The fundamental pattern in language learning seems to me to be the same as in the naïve empiricist story- there are bits [patches of color or words] that are inherently meaningless, but co-occur in certain ways, and gradually, from their conjunctions, we build up a theory of an underlying world behind the [patches of color or words]. Understanding built on a vast corpus of text is no different from understanding built on thousands of hours of visual experience.
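As a rough illustration of structure emerging from co-occurrence alone, the sketch below builds a count vector for each word from a tiny invented corpus and compares the vectors by cosine similarity. The corpus, the stopword list and the function names are all assumptions made for this example, and it is far cruder than anything a real language model does. The words start out as meaningless labels, yet words for similar kinds of things end up with similar vectors.

```python
from collections import Counter, defaultdict
from itertools import permutations
from math import sqrt

# Toy corpus, invented for this example.
corpus = [
    "the cat chased the mouse",
    "the dog chased the cat",
    "the cat ate the fish",
    "the dog ate the bone",
    "the car needs fuel",
    "the truck needs fuel",
    "the car has wheels",
    "the truck has wheels",
]

def cooccurrence_vectors(sentences, stopwords=frozenset({"the"})):
    """For each word, count the other words that share a sentence with it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = [w for w in sentence.split() if w not in stopwords]
        for a, b in permutations(words, 2):
            vectors[a][b] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm = sqrt(sum(x * x for x in u.values())) * sqrt(sum(x * x for x in v.values()))
    return dot / norm if norm else 0.0

vectors = cooccurrence_vectors(corpus)

def similarity(a, b):
    return cosine(vectors[a], vectors[b])

# "cat" lands closer to "dog" than to "truck", and "car" closer to
# "truck" than to "dog", purely from who appears alongside whom.
print(similarity("cat", "dog") > similarity("cat", "truck"))
print(similarity("car", "truck") > similarity("car", "dog"))
```

Nothing in the code knows what an animal or a vehicle is. The grouping falls out of the pattern of conjunctions, which is the same move the naïve empiricist story makes with patches of color.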

True, vision feels like a more immediate connection with reality. But remember that this immediacy- this sense of directly seeing “tables” and “chairs” is mediated through numerous mechanisms- some evolved, some learned and others in between- constructing a theory of the arrangement of things from patches of color, using clues like movement, change, the difference in what the two eyes see, etc.

So there’s little reason to think a theory of the world couldn’t be learned from text in principle. Could GPT-3 in particular learn a model of the world? It seems to me there is no reason to think that GPT-3 couldn't contain, in its billions of parameters and scores of layers, a model of the world. You can model just about anything with neural nets, and certainly, the training data seems extensive enough. Moreover, without going into the technical details, my understanding is that if creating a model of the world is the only viable way of predicting the next word with sufficient accuracy, the process of backpropagation and gradient descent will drive GPT towards it.
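The claim about gradient descent can be illustrated, very loosely, with a one-parameter toy. Below, a hidden "world" generates data by a rule the learner never sees; the learner is trained only to predict well, and in doing so its parameter converges on the hidden rule. Everything here (the rule `y = 3x`, the names, the learning rate) is an assumption chosen for illustration, and it shows nothing about whether GPT does the same at scale.

```python
# Hidden "world": y = 3x. The learner only ever sees (x, y) pairs.
TRUE_SLOPE = 3.0
data = [(x, TRUE_SLOPE * x) for x in (1.0, 2.0, 3.0, 4.0)]

def train(data, lr=0.01, steps=500):
    """Fit y = w * x by plain gradient descent on mean squared error."""
    w = 0.0  # the learner starts with no theory of the world
    for _ in range(steps):
        # Derivative of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w

w = train(data)
print(abs(w - TRUE_SLOPE) < 1e-6)  # prints True
```

The training objective mentions only prediction error, never the slope itself. The learner ends up representing the generating rule because, in this toy, that is the only way to drive the error to zero.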

So we have three reasons to think ChatGPT understands ‘the world behind the language’:

1. It's superb at behaving as if it does.

2. In principle, it has enough free variables to represent a pretty good model of the world.

3. There's no reason a model of the world couldn't be learned from a very large corpus of text that represents the world, since the interrelations between words in that corpus correspond to patterns in the world. It’s possible in the same way that viewing nothing but a sequence of films (a sequence of arranged pixels) could be used to construct a theory of the world.

I ask ChatGPT

Me: Okay, I'm going to lay my cards on the table here. I'm a philosopher with an interest in language and AI. A common idea about language is that in order to understand language, an agent must be "grounded" in a certain sense- it must correlate words with experiences. Your existence- ChatGPT- has threatened many people's conceptions- because your training data is exclusively textual, and yet you seem to have a comprehension of the world in the operational sense that you can answer questions about it. There's a huge debate going on as to whether you can understand language as such. I'd like to ask if there was anything you wanted to add to that debate?

ChatGPT: As a language model, my understanding of language is based on the patterns and relationships that I have learned from the large amount of text data that I have been trained on. This text data includes a wide variety of information about the world, including facts, ideas, and experiences. While I do not have my own experiences or consciousness, I can use the patterns and relationships that I have learned to generate responses to questions and prompts that are coherent and informative.

It is true that my understanding of language is different from that of humans, and some people may question whether I can truly understand language in the same way that humans do. However, it is also important to recognize that my ability to generate responses to questions and prompts can be seen as a form of understanding in its own right. Additionally, my ability to generate responses to questions and prompts can be tested, and my performance can be evaluated and compared with that of humans.

It is also worth noting that the debate about AI and language understanding is ongoing and complex, and there are many different perspectives and opinions on this topic. My own capabilities are still limited and there are a lot of open questions about the nature of intelligence and understanding, and it is an active area of research.

As someone who works with language models, and has spent some time mucking about in their innards, I'd like to split the "world model" thing into subquestions:

1) Do stacks of encoder and/or decoder layers have a world model in them?

2) Do (a) the outputs vanilla GPTs generate naively, or (b) the outputs some GPTs are tuned to generate using RLHF or similar techniques, draw upon that world model in a consistent and reasonable way, or are they just kind of BSing their way through it in ways that seem humanlike to humans?

The answer to (1) is definitely yes; there's lots of things language models can do that they wouldn't be able to do if they didn't have a world model in them.

I think the answer to (2a) is mostly no; vanilla GPT-3's output lurches around conceptually in ways that are often bizarre and incoherent.

The answer to (2b) is unclear, but I lean toward yes. A system that has access to a world model and is rewarded for behaving as if it is drawing upon that world model is probably drawing upon that world model at least to some degree.

ChatGPT still has some serious issues using its world model, though - it's extremely prone to making things up that don't exist; it seems to really want to give affirmative-seeming answers to questions ("does Python library X provide functionality for Y?") even if it should be giving a negative answer. So it may be drawing upon the world model when it can, but BSing in certain cases because it wants to say "yes" even though its world model tells it "no".
