Hydra

A small sketch of a vital problem, and a brief gesture at a possible solution

UBI forever?

In what follows, I’ll be talking about permanently sustaining a democratic government which is responsive to its people’s needs, even though they cannot sell their labor and do not own the means of production. But first we must note that even being in this situation- having this state of affairs to sustain- is fortunate.

Sustaining, for example, “UBI” is a good problem to have, because it presupposes that you’ve won UBI to begin with. People get very lackadaisical about this! Many think that AI taking jobs wouldn’t be that bad because we’ll all just get UBI. I cannot emphasize to you enough that:

There is no law of nature that says UBI necessarily follows a drop, even a massive drop, in the demand for labour.

Even if there is a UBI, and even if on aggregate, society becomes fantastically richer, there is no guarantee that UBI will be anything but meagre. Consumption and income inequality might increase greatly, perhaps to astronomical levels.

You should try to get your guarantees upfront. Waiting to seek UBI and similar guarantees until after we no longer have any labor bargaining power would be a mistake.

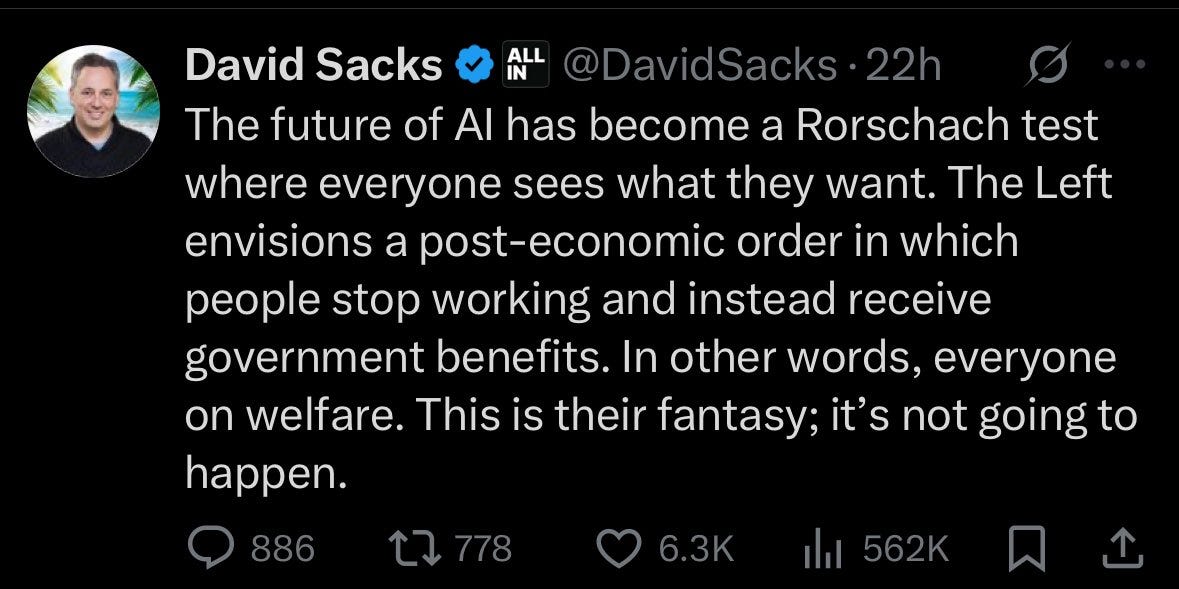

People are already lining up on the other side of this fight. Consider, for example, David Sacks. Never a pleasant consideration. There’s always been something transparently awful about him in a way that isn’t common to billionaires. Elon clearly believes in bigger things, even if they’re nuts. Ackman is earnest on some level, and we see it in his 3000+ word tweets. Bezos knows how to live it up a bit, and so doesn’t post much. Sacks, however, is just a ball of resentment for those weaker than himself: what I have previously called Yvne.

David Sacks is apparently Trump’s AI Czar. As with most X Czars, the reality disappoints the idea. Right now, the big AI issue seems to have moved from deepfakes and misinformation to job loss. For example, search volume for AI Job Loss has quadrupled in the last 12 months:

Perhaps this is why Sacks felt the need to address the peons now- to put a clear line against any policy of clemency- shut it down early so no one gets any ideas:

Conspicuously -suspiciously- missing in this statement is an explanation of what the right sees in this “Rorschach test”, at least the right according to Mr Sacks. If there really are no jobs for humans, but UBI or other payment mechanisms are a “fantasy”, what are the proles to do? Or perhaps, what is to be done to the proles?

The most sympathetic interpretation of Sacks here is that he’s refusing to accept the premise of the hypothetical. He simply believes, myopically, that there will always be jobs for everyone. The less sympathetic interpretation is that he thinks anyone caught in this situation should starve to death, be rounded up and shot, or perhaps, in the very best case cared for as a voluntary philanthropic venture. Which reading is more accurate, the sympathetic or unsympathetic? Reader, I am unsure, but I have just about run out of sympathy.

So, post-AGI UBI is not guaranteed. Even at the start, powerful forces align against it. But at least in principle we know how to attain it: persuasion, strikes, electoral fronts, pressure of all sorts. However, supposing we get it, how do we make sure we keep it? That’s a less explored question.

How could we ensure that universal provision and democratic control of the state continue forever in a post-AGI world? As we will discuss below, in a society in which humans have no labor power and no military power, this is much harder than in a contemporary democracy. Note that the kind of society we are talking about is quite different to our own- we are not just talking about ubiquitous drones or flying cars, we are talking about a world in which there are machines that can do every physical and cognitive task better and more cheaply than humans. You might think this world is distant- perhaps it is or perhaps it isn’t- but I do think it will come about at some point, hence it is worth thinking ahead to the problems it poses.

Three possible post-singularity futures

To set the scene, consider three possible futures.

This is not an exhaustive taxonomy of all post-singularity futures in which humans or post-humans remain in control. However, it does seem to me to contain the most likely options.

1. One or a few people own all wealth. No government can take any of the surplus. Unless we are extremely lucky (controlled by some rare, truly benign dictator(s)), this would be a catastrophe for most of humanity. At the very least, we will be put to the side.

2. Oligarchs own the wealth, but governments still exercise power over them and extract a surplus (this scenario varies substantially based on the quantum of surplus extracted, from de facto nationalisation to a small concession). In some ways this is not unlike our world. I suspect this option is unstable in a post-AGI state of affairs, but I may be wrong.

3. Since oligarchs no longer serve any useful role (even economic planning is done by AGI), the state seizes all their wealth.

I am interested in institutional control in cases 2 & 3. In case three, this will mean securing democratic control against politicians and civil servants. In case two it will mean securing democratic control against politicians, civil servants and oligarchs.

Hydra

Hobbes wondered about how unified power could arise from a condition without it, where it seemed, so to speak, uphill from the present circumstances. Leviathan (a commonwealth) is the desired goal, a vast, single-headed monster (the single head representing the sovereign) strong enough to prevent civil war.

I wonder about the opposite problem. In a world where humans will have effectively no labour power and no direct military power, how to prevent a handful of people, or even just one person, from seizing all control? In those circumstances, democracy seems uphill whereas autocracy seems, unfortunately, only an easy downhill stroll away.

Call the desired end goal here Hydra- a vast and, critically, many-headed monster, one powerful enough that no Herakles will ever stand against her. The final and absolute wall against autocracy in a world in which the natural defences against and limitations on autocracy no longer work.

To see the problem, compare a world in which human labor is worthless and humans have no military power, with how things are now. Now, we only live in a quite limited form of democracy, yet, if, say, the UK parliament voted that its current term would last forever- attempting an autogolpe- the public would start using hard power against parliament quickly. They would strike, sabotage, begin guerrilla campaigns, and urge their relatives and friends in the military to mutiny. We might summarise these weapons as follows- in the lonely hour of the final instance, ordinary people have three sources of hard power:

People can withhold, or maliciously apply, their labor power.

Ordinary people could, theoretically, wage guerrilla warfare against their government and possibly eventually conventional warfare if they continued to gain ground. In a broad sense, we include sabotage as a form of direct action here.

Ordinary people, albeit demographically and politically filtered, make up the military and their families. Hence, if the public gets really, really mad, there’s a good chance the military will join them.

None of these strategies would be possible in a world in which human labor was worthless, public order was ensured by robots more numerous and capable than any human resistance, and the army itself was automated.

I’m not saying hard power is the only kind of power. Power is a rich text. Mao Zedong may have said that power comes out of the barrel of a gun- and this is true in a sense, but ultimate power is ineliminably social- even hard power depends on our beliefs and attitudes. That squad shooting at you down the barrels of their guns all follow a leader- theoretically, they could all decide another squad member is the leader at any moment, but they don’t because culture doesn’t allow it. At base, hard power is itself made up of soft power- if power just came out of the barrel of a gun and didn’t come from culture, institutions, and social structure, the largest unit of power would be the single individual with a gun.

Still, hard power matters a lot. It is unclear that democracy, limited as it is, would survive in a world in which the public had no hard power. For this reason, AGI poses the Hydra problem- how do we prevent the rise of a unitary authority when a small number of people can control machines that can do everything you can? Existing democratic controls are backed by the fact that the anger of the people has real, material consequences even outside the political system- it can kill people and break economies. Taking existing democracy as a satisfactory model for resilient post-AGI democracy would likely be an error.

Sortition as the final control system

My proposal is to give final control over all military assets to a group of individuals who represent the people but are not part of the political class. But how do you get a group of democratic leaders without a background in the political class?

Sortition. A large(ish) council of individuals -perhaps 300 or so- with final power over all military assets, who are selected at random from the public, and swapped out regularly. Perhaps every month one-third of the assembly could be swapped out, ensuring they do not become a political class in themselves- total tenure would hence be 3 months per member.
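The rotation arithmetic above can be checked with a small simulation. This is only a sketch under my own assumptions: the function name `simulate_rotation`, the population of one million, and the rule that nobody currently seated can be drawn again are all illustrative choices, not part of the proposal. It seats a fresh third of the council each month and retires the longest-serving third once the council is full.

```python
import random
from collections import deque


def simulate_rotation(population_size=1_000_000, council_size=300,
                      cohorts=3, months=12, seed=0):
    """Seat one new cohort per month and retire the oldest once full.

    All parameter values are hypothetical. Returns the tenure (in months)
    of every retired cohort and the council size at the end of each month.
    """
    rng = random.Random(seed)
    cohort_size = council_size // cohorts
    seated = deque()        # (start_month, member_ids), oldest cohort first
    completed_tenures = []  # months served by each retired cohort
    sizes = []              # council size after each monthly rotation

    for month in range(months):
        # Retire the longest-serving cohort once every seat is filled.
        if len(seated) == cohorts:
            start_month, _ = seated.popleft()
            completed_tenures.append(month - start_month)

        # Draw replacements at random, skipping anyone currently seated.
        # (Former members may be drawn again later; the essay does not say
        # whether that should be allowed.)
        sitting = set().union(*(members for _, members in seated))
        new_cohort = set()
        while len(new_cohort) < cohort_size:
            candidate = rng.randrange(population_size)
            if candidate not in sitting:
                new_cohort.add(candidate)

        seated.append((month, new_cohort))
        sizes.append(sum(len(members) for _, members in seated))

    return completed_tenures, sizes


if __name__ == "__main__":
    tenures, sizes = simulate_rotation()
    print("tenures of retired cohorts:", tenures)
    print("council size by month:", sizes)
```

In this sketch the council fills up over the first three months and then holds steady, with each cohort serving three months before it is swapped out. A real scheme would also need a rule for the start-up period and for whether past members may serve again.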

The premise here is that this group, because it is large, representative of the public and not made up of the antisocial types that commonly run for office, will not try to seize power. That is, if one selects 300 ordinary people with a temporary mandate, one will not find among them 151 people so antisocial as to aim to end popular control and make themselves permanent tyrants.
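One rough way to put numbers on this premise is a binomial tail. Suppose, purely as an assumption, that each randomly selected member independently turns out to be a would-be tyrant with some probability `p`; the chance that at least 151 of 300 are is then the upper tail of a Binomial(300, p) distribution. The function name and the values of `p` below are hypothetical, and real councils would not be independent draws (members can persuade, bribe, or threaten each other), so treat this as an illustration of scale rather than a safety guarantee.

```python
import math


def majority_capture_probability(p, council_size=300):
    """P(at least a strict majority of members are 'bad'), assuming each
    member is independently bad with probability p (0 < p < 1).

    Computed in log space to avoid overflow in the binomial coefficients.
    """
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    n = council_size
    majority = n // 2 + 1  # 151 when n == 300
    total = 0.0
    for k in range(majority, n + 1):
        log_pmf = (
            math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
            + k * math.log(p) + (n - k) * math.log(1.0 - p)
        )
        total += math.exp(log_pmf)
    return total


if __name__ == "__main__":
    # Hypothetical shares of the population willing to end popular control.
    for p in (0.05, 0.10, 0.30, 0.45, 0.50):
        print(f"p = {p:.2f}: P(majority) = {majority_capture_probability(p):.3e}")
```

The point of the sketch is that the tail collapses very quickly as `p` falls below one half, which is the sense in which size and random selection do the protective work. The independence assumption is the weak link, which is part of why the short tenures and the protections on the group's deliberation matter.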

Robots with any military or intelligence capacity (basically all intelligent models(?)) would be programmed to treat this group as sovereign. Most directly, that means following the orders of the Hydra group, but just as importantly, it means:

Protecting their physical safety, capacity to deliberate autonomously, ability to gain information about what is happening, and their capacity to give orders.

Interpreting their orders in a way that is compatible with the continued sovereignty of the control system.

Interpreting their orders in a way which is compatible with the continued succession of members in the control system.

Refusing and reporting any orders from military officers etc. which would jeopardise the control system.

Passing all of this on whenever new artificial minds are created.

Of course, day-to-day command and management would presumably be handled by military officers, civil servants etc.- the purpose of the Hydra group would be solely as a loyalty sink and safeguard. That’s the rough outline; a lot more would need to be said about the details.

Get serious about valuing people and their autonomy

To summarise:

At the moment, much of what prevents the political class from permanently seizing power in an autogolpe has nothing to do with the fact that they’re elected and everything to do with the hard power factors listed above. It might help that they’re elected, but we shouldn’t rely on it. Purely constitutional barriers aren’t what’s doing the work either- the UK has almost none, and yet this parliament seems unlikely to vote for itself absolute power.

None of the protective hard power factors I listed will persist after the coming of AI that can do all jobs and fully automate war and policing.

Ergo, we must find some design mechanism that ties power directly to the people, not just through their representatives, post AGI. Normal safeguards are not sufficient, or at least we would be foolish to rely on them.

Unfortunately, this isn’t just a design problem; it’s a political problem as well. Implementing guards like those I have described will face fierce opposition. If a proper control system is to be implemented, it will need social energies behind it. For example, it might happen as one outcome of channelling the fury of a mass of workers laid off by AI and seeking guarantees that they will never face such insecurity again.

Why care about democracy? There are many reasons, but the main one is:

Because if democracy falls, and a small number of oligarchs or autocrats get to choose the version of ourselves that we send out into the lightcone, I do not trust them to send out a version of ourselves that captures what is uniquely valuable about our species.

We have no idea how bizarre or awful the untrammeled rule of one or a few might be. There has never been in all the history of the earth a society in which a single individual truly makes all the decisions, with no need to respect the will or wishes of ordinary people. Even corporations, almost perfectly dictatorial, still in practice have to build consent from their employees. A post-AGI oligarchy or dictatorship would be a terrible new experiment never tried in human history, far in excess of Joseph Stalin or Genghis Khan’s power. It might be much worse and more alien than you’d think. What we do know is that people who self-select for tilts at great economic, political, or even social power are very different from the norm, and in unflattering ways.

Within our own spaces, one initial step I would suggest as hygiene here is taking democracy seriously. If you are concerned with AI safety, wanting a big tent is natural, but I would argue that you should not make it so big as to include those who want to use technology to crush the democratic power of ordinary people.

If people interested in AI safety are a movement to ensure that the future is governed by humane values, we must recognise that human opposition to humane values exists. Indeed, sometimes it seems as if history is full of little else. Much as we revile people who cheer the replacement of humanity by machines, we really must revile those who regard substantial chunks of the population as a moral inconvenience at best, and at worst, a group it would be desirable to kill or contain forever. These people will often (usually?) just straight up tell you that they feel this way, so there is no excuse for being naive about their existence.


It seems to me to be a problem of being infected by longtermist philosophy that you have to justify solving these concerns as "sending the best version of ourselves into the lightcone." How about plain old fashioned liberalism?

Please consider applying for part of this bounty, offered on LessWrong:

https://www.lesswrong.com/posts/iuk3LQvbP7pqQw3Ki/usd500-bounty-for-engagement-on-asymmetric-ai-risk