I wanted to give some methodological reflections on studying Large Language Models- not studying how to make them or improve them, but studying how they behave, why they behave that way, their capabilities, and the philosophical implications of all this. This is the burgeoning field of LLM psychology, in its empirical, theoretical, and philosophical forms.

I think LLM psychology is fascinating in its own right, but I wanted to write this piece and pitch it accessibly for another reason. While you don’t have to have a great technical understanding of how LLMs work in order to be able to comment sensibly on LLMs and society, you really do have to understand what they can do now, and what they’ll likely be able to do in the future. Too many people are skipping this step! Moreover, too many people are trying to overload it with philosophical energies and anxieties. If I had one message about this, it would be STOP TRYING TO BE A PHILOSOPHER. There’s philosophy to be done, to be sure, but first you must grapple with what is in front of your face.

I come at this from a background in philosophy. Philosophers trained around the parts where I live are rightly suspicious of doing too much philosophy. The idea that philosophy can only tell us about analytic a priori truths has (rightly) been rejected as too narrow- and too wanting for a definition of “philosophy”. Nonetheless, there is wisdom in not being led by the nose in philosophy when it comes to empirical matters. You should be suspicious of anything that looks like a philosophical argument about an empirical outcome.

The most reliable way to develop the skill of “not being led by the nose in philosophy” seems to be to do an enormous amount of philosophy, after which you will either develop a resistance to being led by the nose philosophically, or you will always be led by the nose philosophically- one of the two.

If philosophy leads you to make too many predictions on too many empirical questions, be suspicious; if philosophy leads you to predict things won’t happen THAT HAVE ALREADY HAPPENED, be more than suspicious.

The real use of philosophy in a scientific domain like language models is, in my experience, the clearing away of preconceptions that the world must be a certain way. And there are a lot of people in this area clinging to such preconceptions.

One common form of clinging is categorical rejection without specificity: indicating in broad, knowingly cynical terms that LLMs “won’t work” without grappling with the strengths and abilities of frontier models.

Suppose you think that the real problem with current AI is that it isn’t neurosymbolic, in the sense that a more neurosymbolic approach would yield dividends. Fine! You might be right. But you cannot simply say “Current LLMs are bad and won’t be able to do anything” when current LLMs are already doing things. A sensible theory of limitations needs to distinguish, ideally in precise terms, between the ways these language models are limited and their strengths.

I’m not objecting in principle to any line of criticism here. It could be that AI will hit ceilings in the future that can only be exceeded through more symbolic processing- I’d say it’s not terribly unlikely. It’s even possible that (*shudder*) what AI really needs is more embodiment. However, existing approaches have their strengths; it seems unlikely to me that we have reached the very peaks of those strengths. Mature neurosymbolic or pro-embodiment approaches must grapple with the strengths of the existing approaches and offer explanations and predictions.

ChatGPT-3.5 was an enormous improvement on GPT-3, GPT-4 was an enormous improvement on 3.5, 4o was an enormous improvement on 4, and O3 was an enormous improvement on 4o. Even if there is no way to ride this train to AGI-ville (and I think there probably is a way), even if everything is going to collapse soon, we need to grapple with what exists in a mature manner. In my view, we’re already at the point where the best of existing technology could likely knock over many jobs with a little work.

So alternative paradigms like neurosymbolic computation and (*shudder*) “Four E cognition” are fine, but there needs to be some sort of empirical accounting. Critics of LLMs have not, in my view, grappled adequately with their (apparent) failed predictions. Rather than just saying “it was shit from the start” to closely paraphrase one LLM critic, we need much more concrete, dare I say falsifiable, predictions about what they will and will not be able to do, or at least their strengths and weaknesses.

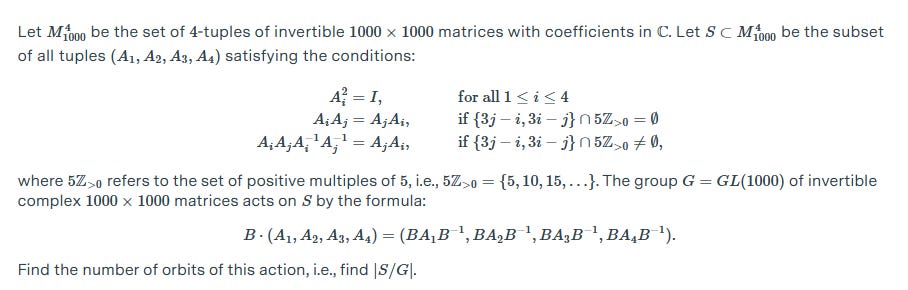

Consider, for example, the claim that AI is a stochastic parrot. There’s a way of interpreting this claim in which it makes no predictions at all, in which the claim would be perfectly compatible with an LLM solving any problem at all. I do not think this is what the authors of the paper intended, because if this is what they intended- purely a philosophical quibble- then their paper would be of little practical relevance. However, if we do interpret the paper as making robust claims, i.e., that the paradigm is doomed to fail because of a lack of meaningfulness, then it’s a problem that contemporary LLMs can solve this now:

[Image of extremely difficult group theory problem from the frontier maths dataset]

Whereas when the Stochastic Parrots paper came out, models often failed questions like this:

Sophia and Rose went together to the market to buy onions and potatoes. Rose bought 4 times the number of onions and potatoes that Sophia bought. If Rose bought 12 onions and 4 potatoes, how many onions and potatoes in total did Sophia buy at the market?

Critics of LLMs must state precisely:

Exactly what it is they are predicting LLMs will never be able to do, in the form of concrete and measurable tasks.

What counts as an LLM in this context.

Exactly why they think LLMs will never be able to do these things.

A little humility about the fact that critics clearly haven’t predicted the trajectory thus far also might not go amiss.

A timeline of the last half-decade in Large Language Models

I’ve realised that a lot of critics of AI simply are not familiar with what the frontier models as of June 2025 can do. I think this is a shame. Unfortunately, there is simply no alternative to forking out money for a paid subscription to Gemini, ChatGPT or Claude, and selecting Gemini 2.5 Pro, ChatGPT O3 or Claude 4 Sonnet.

If the money really is an issue for you, Gemini Flash from Google DeepMind is available in relatively large quantities for free and is relatively close to the frontier.

However, I thought perhaps a recap of the last ~5 years might be useful.

Around 2019, AI gained the ability to (semi) reliably answer questions reflecting commonsense relations between events like this (cf. the Choice of Plausible Alternatives benchmark):

Premise: The woman spilled coffee on her shirt.

Alternative 1: She got a stain on her shirt.

Alternative 2: Her shirt was freshly cleaned.

AI also gained the ability to parse sentences and make guesses as to the resolution of ambiguities with fair reliability. For example, suppose I told it:

Frank felt [vindicated/crushed] when his longtime rival Bill revealed that he was the winner of the competition. Who was the winner of the competition?

Depending on whether the word in brackets selected was vindicated or crushed, it could tell me whether “he” likely referred to Bill or Frank.

Around 2021, AI gained the ability to have a decent shot at fairly simple reasoning questions like this (GSM8K benchmark- around 75% performance in 2021)

"Natalia sold 48 cupcakes in the morning. In the afternoon, she sold half as many. If she wanted to sell a total of 100 cupcakes, how many more does she need to sell?"

Or: Julie is reading a 120-page book. Yesterday, she was able to read 12 pages and today, she read twice as many pages as yesterday. If she wants to read half of the remaining pages tomorrow, how many pages should she read?
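For a sense of how little computation these questions demand once they have been parsed, here is a minimal worked sketch of the two problems above. The function and variable names are mine, purely for illustration, and the problems are taken exactly as worded here rather than from the benchmark files.

```python
# Worked arithmetic for the two word problems quoted above.
# Names are illustrative only; nothing here comes from the benchmark itself.

def cupcakes_still_needed(morning: int, target: int) -> int:
    """Morning sales, half as many in the afternoon, then the gap to the target."""
    afternoon = morning // 2
    sold_so_far = morning + afternoon
    return target - sold_so_far

def pages_to_read_tomorrow(book_pages: int, yesterday: int) -> int:
    """Twice as many pages today as yesterday, then half of what remains."""
    today = 2 * yesterday
    remaining = book_pages - (yesterday + today)
    return remaining // 2

print(cupcakes_still_needed(morning=48, target=100))         # 28
print(pages_to_read_tomorrow(book_pages=120, yesterday=12))  # 42
```

The hard part for a language model was never the arithmetic; it was turning the prose into these three or four steps reliably.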

Around 2022, the best generative AI gained the ability to (usually) be able to answer questions requiring fairly detailed factual knowledge like this:

In which of the following did the idea of ‘natural law’ first appear?

A) The French Revolution

B) The American War of Independence

C) Roman Law

D) Greek thought

Or

Which of the boys on the TV show 'My Three Sons' is adopted?

For what purpose would you use an awl?

Which of these values is the most reasonable estimate of the volume of air an adult breathes in one day?

(Above examples from the MMLU in which AI achieved about 75% performance in 2023)

Just before 2023, ChatGPT came out. AI now presented itself as a “subject” that you could “chat with”. You could ask a question like “What would happen if the land mass of Europe suddenly disappeared?” and it would take a reasonable stab at answering that question.

Around 2024/2025, the best AI gained the ability to somewhat reliably answer questions requiring both sophisticated reasoning skills and deep subject matter knowledge, like this:

Astronomers are studying a star with a Teff of approximately 6000 K. They are interested in spectroscopically determining the surface gravity of the star using spectral lines (EW < 100 mA) of two chemical elements, El1 and El2. Given the atmospheric temperature of the star, El1 is mostly in the neutral phase, while El2 is mostly ionized. Which lines are the most sensitive to surface gravity for the astronomers to consider? A) El2 I (neutral) B) El1 II (singly ionized) C) El2 II (singly ionized) D) El1 I (neutral)

And this:

A scientist studies the stress response of barley to increased temperatures and finds a protein which contributes to heat tolerance through the stabilisation of cell membrane. The scientist is very happy and wants to create a heat-tolerant cultivar of diploid wheat. Using databases, they find a heat tolerance protein homologue and start analysing its accumulation under heat stress. Soon enough, the scientist discovers this protein is not synthesised in the wheat cultivar they study. There are many possible reasons for such behaviour, including: A) A miRNA targets the protein, which makes exonucleases cut it immediately after the end of translation and before processing in ER B) Trimethylation of lysine of H3 histone in position 27 at the promoter of the gene encoding the target protein C) A stop-codon occurs in the 5’-UTR region of the gene encoding the target protein D) The proteolysis process disrupts a quaternary structure of the protein, preserving only a tertiary structure

That’s from the GPQA, which is meant to be a postgraduate/professor level task, where one cannot simply “Google” the answers, but must apply the equivalent of years of knowledge. Approximately 85% performance has been achieved as of the time of writing.

Since language models are close to maxing out ordinary questions of the sort one might put to them in a benchmark (and frankly, outwit almost all white-collar workers when it comes to question answering), much current work focuses on agency, trying to create models that can reliably control a computer, with all the planning and reasoning involved. Agency, as we’ll discuss later, is still a huge problem for these models. Consider the below graph. While on most tasks the best models can complete activities which take a human around 100 minutes to complete, when it comes to agency (the OSWorld task), the best models can only manage, on average, tasks using a computer that take a human 1 minute to complete.

Big C Conceptual thought- avoid it!

We use categories to sort the world, and ultimately to make predictions. With a few exceptions (e.g., specific chemicals) our categories are usually less well defined than we realise. A common theory is that categories are based on prototypes. Regardless, they are much more fraught than is often understood. A major difference between how professional philosophers think about philosophy and how amateurs think about philosophy is the relative eagerness to adhere strongly to concepts. Amateur philosophers love staking out their positions with ideas like “Perception”, “Person”, “Reason”, “Rationality”, etc., while philosophers are typically more circumspect. This is not to say concepts are wholly arbitrary! There’s a rich literature on the concept of “naturalness” initiated by David Lewis, but, as blogger Scott Alexander once put it, the concepts were made for man, not man for the concepts.

And concepts referring to mental phenomena? These are fraught even by the standards of concepts generally. “Thought”, “creativity”, “reason”, “believing”, “meaning”, “intention”- these are not just ways of categorising, they are often at least implicitly ways of valuing and judging.

To be clear, I believe in these categories- I think that if someone thought about them for a long time, through a process of reflective equilibrium, they could come up with an account of these categories that aligns with most folk intuitions and refers to something in the world. However, given their vagueness when applied to an unusual case like an LLM, it may be best not to rush in with these concepts. We should not be led forward by concepts we barely understand.

Or to capture the same idea more succinctly: Does AI think? Does AI create? Does AI reason? Does AI make decisions? One might as well ask, does a submarine swim?

I am perpetually dumbfounded by the confidence that people seem to have in their concepts. There are activities and qualities of humans that we say are “creativity”, “rationality”, “decision-making”, etc., but those activities have many features, and which are critical and which are inessential will be a matter of contention without a consensus answer. Does AI share enough of those features, or the right features, to count as creating, reasoning, decision-making? Our conceptual uncertainty here is further compounded by a deep theoretical and empirical uncertainty about how the machines work. The only reasonable answer is- “How should I know!”. Clinging to the names of things is a known human bug:

The Dao that can be spoken is not the constant Dao.

The name that can be named is not the constant name.

Nameless, it is the beginning of Heaven and Earth;

Named, it is the mother of the ten-thousand things.

Just a little bit of behaviourism

Behaviourism as a thesis in psychology held that we should study the relationships between an organism’s inputs and outputs, rather than theorising about internal mechanisms. Taken to its extreme, the proposal is ludicrous. It rejected as superfluous concepts like “thinking”, “planning”, “reasoning”. And yet it was useful in a time and place.

It was in a somewhat behaviourist spirit that Turing proposed his infamous Turing test. By defining intelligence as an outcome (being able to pass oneself off as human), Turing avoided reference to internal processes.

Turing’s first pass operationalisation of intelligence in terms of a useful capability is not all there is to say on the topic- nor are the goalposts he chose necessarily the best. Nevertheless, as a cognitive palate cleanser, his approach has its uses, and it is particularly useful to avoid getting caught up in high church big C concepts like the ones I talked about above. There will be a time for contemplating whether or not the machine can really mean, think, create, or even feel, but first, or at least separately, we must contemplate what it can do. Such knowledge will inform our speculations on meaning, thinking, creating, and feeling immeasurably.

So I want you to adopt just the tiniest smidgen- just a whiff- of the behaviourist spirit when thinking about AI. Stop thinking for a moment about “does it really X” and ask in the spirit of a barefoot empiricist, can it X, Y, and Z, and will it be able to X’, Y’, and Z’ in the future? It matters how AI does things- no sane person would deny this- but we need to think exactly and completely about its current capabilities, and make reasonable extrapolations about extra capabilities it will gain in the short to medium term. Getting too bogged down in speculation about the underlying process can be a hindrance. Barefoot empiricism should not be a permanent posture, but a dose, especially at the start, and especially about something so theoretically high-strung, can be useful.

Tentative mental language, using the example of “hallucination”

Having stepped back to a more behaviourist stance, we can then think tentatively about stepping forward to mental language once more, but with safety precautions. Specifically, we can use as-if language.

Take the case study of hallucination. It’s a truism that AI “hallucinates” a lot. The term is perhaps unfortunate, as “hallucination” in this context has little to do with the normal meaning of the term, but instead refers to something like remembering things or seeing things in documents that aren’t there. LLMs do have a hallucination problem, though it’s worth keeping in mind that humans hallucinate in this sense a fair bit too. An exceptionally intelligent friend of mine once confidently said that by law, the monarchs of England have to go Queen-King-Queen-King.

To be clear, in saying that the problem is not “unique” to LLMs, I am not denying that it is much worse in LLMs. Dramatic hallucinations that would be evidence of insanity in a human (e.g., ChatGPT O3 claiming it has a body and can walk around) happen all the time.

But it is a common and widely supported hunch that in many- perhaps even most- cases (though certainly not all), “hallucinations” are much more analogous to lying or being deliberately reckless towards the truth than to the delusion or absentmindedness people usually imagine. If this is true, the term “hallucination” is perhaps doubly misleading, because the falsehood is “deliberate,” not a “mistake”. Why think an LLM might lie? The way it’s trained makes it try to “impress” its users, and making up stuff is a natural corollary of that goal.

But to lie is to: deliberately say something which you believe to be false with the intention to deceive. How can I endorse this explanation, given that I’ve suggested we must not get too hung up on mental terms in relation to LLMs? The whole concept of “lie” is bound up in mental concepts.

The answer is that we model LLMs as if they are lying and see what explanatory work we can do with this assumption. For example, if “LLMs are lying” is a better model than “misremembering”, we might expect the following:

We should be able to find traces in the LLM’s internal processing that discriminate between true and false statements, indicating “knowledge” that what it is saying is false. We do indeed find this.

We might also find reasoning models explicitly thinking through their plans to deceive the user in their hidden chains of thought. This, too, has been observed by many (I myself have seen it in the thinking summary!).

Contextual features- e.g., different kinds of pressure and incentives- should alter “lying” and “misremembering” in different ratios, which will again allow us to discriminate between them. (I am unaware whether we have high-quality experimental work on this.)

I am a scientific realist by temperament, so I think that if “as if” explanations work, it will provide evidence (via a no-miracles argument) that language models have features that are in some structural way analogous to human mental features- or at least the ones we apply to them through our as-if statements. That would tend to support the existence of homologues to some of our mental states in LLMs. However, how closely the homology held- and whether the homology held closely enough to merit applying mental terms to LLMs in an uncomplicated way, without the “as if” clause- would be a matter requiring empirical and conceptual investigation.

Simulation and mindedness in LLMs

Is it even possible that an LLM could do anything even remotely analogous to lying? How can a next token generator do that? I want to sketch out a plausible mechanism by which a “next token generator” might become a liar.

An extremely sophisticated next token guesser (presumably augmented with Reinforcement Learning from Human Feedback) needs to model, at some level of detail and as well as possible, the world that the tokens are describing. It also needs to model minds, both as part of the world and as authors it is imitating. Suppose, for example, I ask you to write a letter from George Washington to Jacinda Ardern in a hypothetical world in which Washington is a scene girl and Ardern is an emo. To have any hope of doing even a mediocre job, you’ll have to model Washington's beliefs, desires, and personality traits, and how this might be different if he were a scene girl. You’ll also have to model 1) What the requester likely wants and 2) How a person given that task might think through it.

Strategic behaviour is further encouraged through reinforcement learning from human feedback (RLHF)- a step that people who describe LLMs as autocomplete often forget. RLHF turns a language model from something that just completes sentences into something that “acts as if” it were an entity called “ChatGPT” or “Claude” or whatever. Now the models act like something with ongoing goals and projects, and plausibly the model is, at some level of abstraction, simulating an agent in order to imitate an agent. It seems plausible to me that modelling such an entity as a liar, if we see the signs of lying I talked about above, could be an empirically useful and predictively productive frame.

Some words that I thought I knew the meaning of before I studied philosophy, but now have no idea

Thinking: When a reasoning model outputs a bunch of text before outputting its answer, and this process of outputting text helps make it more accurate, is this thinking? I have no clue. What about models without a chain of thought? Does engaging in a lot of calculations to usefully guess the answer help? Again, I have no clue. The concept is fraught even in human beings. When I recognise an animal, does that count as thinking? When I see an animal and at first I don’t recognise it, so I have to squint and concentrate, does that count as thinking? When a builder looks at a wall and various aspects of it lead them to say “this is unstable” have they engaged in thinking?

Meaning: Many people think AI does not really know what its words mean precisely because it was trained only on words- the grounding problem. On this model, words have a defective and inherently secondary attachment to reality compared with, say, visual sensation. Even if the training data is a trillion words or more, training on, say, vision, connects the model with the world in a way training on words doesn’t. For a lot of people, the lurking intuition is similar to Searle’s Chinese room argument. Another common slogan is that LLMs get form but not content.

Recently, this view has been challenged, with many suggesting that it may be possible to build a world model- a model of the data-generating process of the linguistic training inputs, through a rich enough corpus of language, the study of its deep patterns and contingencies between the words. Andreas makes a philosophical case here, an ML research team makes a more experimental case here. In principle, I’d argue, moving from a vast corpus of words arranged in intricate patterns to a theory of the world is not so different from moving from activations of the retina to a model of the world. I’ve been arguing this line for years now. Certainly, anyone who has interacted with a reasonably advanced language model will observe that they sure do seem to model the world, even if defectively. The truth is, I don’t know! We have no adequate theory of meaning in humans, which makes forming an adequate theory of meaning for machines difficult.

Creativity: When a reasoning model outputs sentences that have never been written before, is that creativity? What is creativity? I tend to agree with a long line of philosophers who’ve argued that all ideas are ultimately combinations of pre-existing ideas, whether from the senses or innate in the mind. Ideas can then vary on dimensions like novelty and usefulness. I’m inclined to think then that creativity is a matter of degree, and that even if LLMs are impaired in creativity, there is no qualitative distinction between “true” creativity and an LLM’s rearrangements. But perhaps I’m just missing something. Certainly, what a language model does is “rearrangement of what it has been trained on” at some level of abstraction, but I am unsure how that is so very different from us.

Consciousness, sapience, experience, feeling- These are the biggest questions of all. No one has even the beginnings of a fully adequate explanation of the hard problems of consciousness in humans. Even our knowledge that other people have consciousness is often thought to be extremely mysterious.

Do not expect AI to disclose to you the mystery of creation

“Every time I fire a linguist, the performance of the speech recognizer goes up.”

— Frederick Jelinek

It’s bad manners to psychologise one’s opponents, but here I cannot help it. I strongly suspect two groups- linguists and cognitive scientists- are inclined to minimise the achievements of contemporary generative AI because they resent the intellectual emptiness of its attainment. I get it, because I’ve felt that pull too.

In many people's heads, things were meant to happen this way. Some brilliant figure or research school was supposed to uncover a series of replicable algorithms/processes that summarised what the mind is really doing. This would be implemented in a computer, and suddenly, like a switch going on, everything would change. Moreover, there would be delightful discoveries in every area and subfield, each giving everyone a chance to pull together and reveal profound truths about intelligence and our own existence.

But there are few grand revelations about our nature in LLMs or related areas of machine learning. Maybe one day- maybe even soon- AI will push forward with cognitive science, pushing back the boundaries of ignorance and granting us new capabilities simultaneously. This has not happened yet. There has been the odd important bit of synergy- for example between reinforcement learning and prior work in the psychological research program on Temporal-Difference learning/Ventral tegmental area & substantia nigra pars compacta etc. But this is the exception.

Further, to the extent that current AI points to any theory of the mind (dubious), it may provide some support for an associationist/empiricist approach. Many contemporary linguists and cognitive scientists hate this guy:

[Image of David Hume]

The creation of aeroplanes did not depend on a profound understanding of all aspects of bird flight- or even a majority of aspects. We certainly needed to know a fair bit about how birds fly to build aeroplanes, but the overlap was not as large as might be expected. Likewise, one should not expect the building of something that can duplicate human capacities to lead to, let alone depend on, a grand revelation of how human capabilities work in humans.

My hunch about why LLMs have worked staggeringly well is that language is the most compressed form of human understanding; thus, training on language is a kind of cheat code into a humanish way of seeing things. Based on my hunch, I suspect that LLMs might be a lot more kin to us or some parts of us than is frequently acknowledged, though deeply alien in other ways; however, this is only a guess, and I would be crazy to use it as a basis for a forecast. A good basis for a research program is not thereby a good basis for a forecast.

In machine learning, it always seems to come down to MOAR DAKKA [more flops, parameters, and training data], regardless of the field. That’s weird, alienating, and maybe even frightening from a certain point of view.

What we know about the abilities of LLMs

Large language models are extremely good at question answering. A top-of-the-line language model of the sort you have to pay for access to (O3, Gemini 2.5 Pro, etc.) has more than enough firepower to take almost any question an ordinary person would be likely to ask, and answer it well. That’s the core strength. What about the weaknesses?

Large language models, relative to their general abilities, are quite bad at:

Long tasks. Even tasks that a human would find quite easy, but which would take a human, say, 4 hours. This phenomenon is best understood in the context of Software Engineering, where it has been studied statistically (e.g., see METR’s work here). It is likely that the failure of language models described in Apple’s recent paper is related to this. A common theory about the origins of this failure mode is compounded error and exponential decay. I think it’s an open question whether or not LLMs are qualitatively different when it comes to long tasks, or just worse.

Spatial reasoning problems using text inputs.

Visual understanding- it’s fair to say that while contemporary models can process images, these abilities lag behind their capacity to process text.

Operation in domains that require certain kinds of “pure creativity”, for example, writing poetry. Exactly how to express this category, I am unsure, because there are some “creative” enterprises that LLMs are quite good at.

Perhaps the worst flaw on this list- agency. Contemporary LLMs find it very hard to, say, control and operate a computer for specific purposes. Or play a video game. These limitations might be related to the three limitations we just listed- difficulty with extended tasks, difficulty with spatial reasoning, and difficulty with visual reasoning. However, based on effect sizes, I do not think these are a complete explanation of the difficulties LLMs have with agency. My guess is that the real cause is that interacting dynamically with the world is alien to how these things are trained, and that’s a hard thing to break.

A particular kind of double trick question, where you create a question that sounds like a common trick question and the model gives the stock answer, missing the double trick. An example is “which weighs more, 20 kilos of bricks or 20 feathers?” (note the omission of “kilos” between “20” and “feathers”). This question tricks the model because, for some reason- as if it were reading hurriedly- it treats the question as if it were just an instance of the classic trick question for children, “which is heavier, 20 kilos of bricks or 20 kilos of feathers?” I’m not entirely sure that the models are worse than the typical human in this regard, so human baselines would be appreciated.

The kind of commonsense but tricky reasoning covered by SimpleBench questions.
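The compounded-error theory mentioned under long tasks above can be made concrete with a toy model. The sketch below assumes, purely for illustration, that a task is a chain of independent steps, each of which succeeds with the same probability. Neither the independence assumption nor the 99% figure comes from METR or any other study, and the function names are hypothetical.

```python
import math

def chain_success(per_step: float, steps: int) -> float:
    """Probability that every step in a chain succeeds, assuming independence."""
    return per_step ** steps

def half_life_steps(per_step: float) -> float:
    """Chain length at which the overall success probability drops to 50%."""
    return math.log(0.5) / math.log(per_step)

# Assumed per-step reliability of 99%; the value is illustrative only.
for steps in (10, 100, 1000):
    print(steps, round(chain_success(0.99, steps), 3))

print(round(half_life_steps(0.99), 1))
```

On this toy picture, a model that is 99% reliable per step still fails most tasks of a hundred steps, which is one way "just worse" could look like "qualitatively different" from the outside. Whether real failures on long tasks actually follow this pattern is exactly the open question.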

Gary Marcus and a few others would have you believe LLMs are bad at reliable formal reasoning. I disagree: relative to the baseline of humans, I think current models are quite good at reliable formal reasoning. Of course, relative to the baseline of, say, a formal algorithm, they are much worse. Some theorists of AI seem to want the baseline to be a formal algorithm, and I am really not sure why.

Incidentally- and this is very speculative, and I’m not sure where I’m going with this- the success/failure profile of LLMs reminds me of certain types of neurodivergence: failure of spatial reasoning, failure of action planning and execution, strength in question answering, a strange pattern of strengths and weaknesses in creative thinking, and several other alignments. To be honest, it reminds me of myself.

Whatever you do, please do not take the stupid language model that automatically answers your questions on Google as a baseline of what these things can do. That thing is so fucking dumb and it’s causing a real problem because for some people it’s the only AI they interact with.

Endnote 1: The Canberra plan as a way forward on difficult concepts

I’ve suggested above that while we might sometimes use terminology like “thinks”, “believes”, “imagines”, “means”, etc. about LLMs, we should not be led by such terminology, but rather focus on patterns in capabilities- patterns over time, and patterns across tasks.

Still, it would be nice, at some point, to clarify whether or not these machines think, believe, mean, and so on and so forth. Given the vagueness of these concepts, how could such a project proceed?

I think the Canberra Plan in philosophy is the right approach [purely coincidentally, it also happens to be the tradition I was trained in.]

We pick a concept like belief. Having done so, we gather together all the folk platitudes about belief. As far as is possible, we arrange these into a folk theory of belief, using what are called Ramsey sentences (you don’t need to know about those here). We then, using our best science, seek the entities in the natural world that best correspond to the folk theory of belief. That then becomes our understanding of what “belief” means. It is possible that in the process we will realise that some folk platitudes do not apply, or do not always apply, to our best candidate, and these may have to be dropped. It is also possible that even in the core platitudes about what it means to believe something, there will be real cultural (or even, say, gender) variation. This might lead to the identification of different concepts referring to slightly different natural phenomena.

But does this focus on identifying natural things corresponding to ideas mean that, having identified the natural phenomenon corresponding to belief in humans (purely for the sake of argument, say it is some brain state), we are unlikely to also find such things in large language models? Or for that matter, intelligent aliens from Alpha Centauri?

No. The platitudes appropriate to, for example, belief, are largely put in terms of what belief does. Thus, the best theory of what belief is might be functional, rather like the best theory of what counts as a thermometer is based on what a thermometer does. Hence, it is quite possible to find belief in a human, an LLM, and an Alpha Centaurian.

My own philosophical sympathies in the philosophy of mind tend to be functionalist. If functionalism is true, it is very likely that LLMs are believers and desirers, and an entity that simulates mental states and behaves according to the outputs of that simulation is almost by necessity a believer and a desirer. But there are huge uncertainties here, both empirical and conceptual.

Endnote 2: Grave ethical danger

I want to end on a warning. I’ve outlined an approach to studying LLMs that tries to draw away from big T, big M, Mental Theorising for the time being. However, we need to be very careful not to use this as a reason to reject ethical concerns about LLMs and similarly sophisticated transformer models.

Many of our ethical ideas are, for better or worse, tightly attached to mental language. Preferences, pleasures, pains, emotions, self-image, intention, accident, and so on. There’s a danger in taking the skeptical yet flexible approach I described above for understanding LLMs “psychologically” and applying it directly to ethical claims about LLMs framed in mental language: one might gloss over evidence of morally relevant states. This would be like the dark days when certain ideas fashionable in scientific methodology led to absurd denials that we can know that animals experience pain, and much subsequent unethical behavior.

My own functionalist outlook in philosophy tends to make me think that pain and thwarted preferences are not that hard to come by. I worry then about doing grievously wrong things to these entities. I don’t know if LLMs experience negative valence states. This needs to be a priority area of research, and is not something we should take chances on. I wouldn’t want to be responsible for catastrophic harms if “avoid big C conceptual thought” became a dogma!

I'm very sympathetic to almost all of this, but I was a bit surprised at the very end when you got to pain. I think of the functional role of pain as being tightly connected with embodiment and homeostasis. If you've got a body that needs to be at a certain temperature, and which is vulnerable to various sorts of damage, you need some set of signals for telling you when that body is in danger, and how to move it to get out of danger. I think of pain as playing that functional role. That suggests to me that if you've got an intelligence that's trained from the start without a body, there's no strong reason to think it's going to have anything that plays a functional role similar to pain in embodied organisms. Maybe if you hooked up some LLM-style architecture to robot bodies, and did a whole lot of extra training to get the software to recognize and avoid damage to the body, then you'd get pain, but that's pretty different from the pathway we're on for now.

Funny you should mention Fred Jelinek (though you mis-spelled his last name, there's no C before the K). He was a mentor to me, and was more or less directly responsible for the opportunities I had for internships at IBM (with the human language research group at the TJ Watson lab in Yorktown Heights, which he founded) and Microsoft, over the summers after my junior and senior years of college. (Then I started grad school at the School of Information at Berkeley, but promptly dropped out, because who wanted to be in grad school, rather than at some startup, in 1999?)

I'm one of those people who (shudder) believes that LLMs are not, by themselves, a direct gateway to true AGI, although I think it's quite possible we'll have AGI in my lifetime. I think the Boston Dynamics terrain-navigating bots are probably a key ancestor to the eventual true AGIs, as well as the more sophisticated humaniform bots we're seeing now. To get to true AI we need something that is tethered to reality, in a way that makes self-awareness meaningful. You need an entity that is capable of modeling itself in relation to reality -- the stuff that doesn't go away if you stop believing in it -- making predictions, and then updating its mental model based on the results of those predictions. A full AGI is going to include something LLM-ish as an interface, but it's going to have other specialized modules for other purposes. Have a read some time about Figure.ai's Helix model, which splits a "fast" propriosense / motor control system from a "slow" reasoning and planning system. I suspect a true general AI that can move around and interact with the world is going to end up replicating _something_ like the modular design that's observable in human and animal brains. The overall architecture may have some big differences from us -- it might be even more different from a human than an octopus is. But I suspect there will still be recognizable analogues due to "convergent evolution". (If you're going to have vision, you have to _somewhere_ organize and parse the visual input.)

I mostly think about whether the current generation of LLMs is useful for solving a given problem in terms of the question: can the model provide enough structure that the prompt can stimulate an appropriate chunk of the network to produce an appropriate response? That part of the model will exist if the model was already trained on examples of such responses. Exactly how similar the responses need to be, versus how much the model can make "leaps of logic" to answer related-but-novel questions, is an interesting open question.

In any case, I have for instance found that general-purpose LLMs like Claude are quite good at pointing you to the correct IRS publication to answer a fairly complicated question about the US tax code, and usually can just directly give you an answer (although it's good to double-check it against the publication). I suspect a model specifically trained on a corpus of publications about tax law (both the actual code and official IRS writings, as well as analyses from tax lawyers) would do even better. Some friends of mine are working on training models to answer questions about building / zoning / planning codes around the US.