We need to start treating AI development, and its potential impact on the possibility of a humane world, as seriously as we treat climate change. I’m not even talking about existential risk or far-flung, distantly possible applications. I am talking about things that are coming in the next half-decade. I’m talking about stuff that’s technically already possible but is still in the implementation phase.

Computers eating us all is scary, and I’m glad there are people working to prevent it, but there are many other ways artificial intelligence can go very wrong very soon. We need to get real about this on the left. AI futures have a reputation for being of interest only to techno-utopians and techno-dystopians. We need to think much more seriously about how technology will reshape the battlefield of the struggle for a better world.

I don’t think that we can stop what’s coming in the near future through Luddism. For one thing, the technologies I talk about here are already more or less 80% developed. So let’s have a think about what’s coming and how to respond together.

The world I am picturing

Based on current trends, the world I’m talking about is five years in the future, give or take three years. In the next section, I’ll give some evidence for that claim.

In this world, which I’ll sometimes call the medium-term future, computers can do a lot and can take on many jobs. However, there is not (at least not yet) an intelligence explosion where recursively self-improving computers make themselves smarter and smarter, seemingly without limit. Direct human involvement is still necessary for many important white-collar processes, but not all.

The two main consequences I foresee from this which are directly relevant to our discussion are:

Massive job loss- at least in the short term- as a variety of mostly middle-class jobs start to go out the window. Physical labor is, perhaps surprisingly for some, relatively late to start shedding jobs.

There will be computers that can produce propaganda- good propaganda- on an industrial scale. Think of the liberal moral panic over the all-powerful Russian bots but actually significant- not just producing gobbledygook for 13 Twitter followers. These machines will make cartoons, memes, ads, podcasts, YouTube clips, essays, merchandise, and so on.

Exactly how long this medium-term future will last is not clear to me. It could be one year or twenty between this and the singularity. What happens in this interregnum between our period and true artificial superintelligence (ASI), or to put it more crudely, who is on top of the pile when ASI comes along, could be one of history’s most important questions.

The pace of advances in the last few years

In a previous essay, I gave evidence for the claim that language models are getting better at a staggering, even menacing pace. Computers are cracking challenges and datasets that only true AGI was supposed to be able to crack, like the Winograd schema, which was intended to be a replacement for the Turing test. However, in this essay, I’m going to make the point with samples, rather than measured trends. If you want the latter, check out my essay here.

In what follows, I’ll give examples of what two models can do. PaLM 540B is a language model, while DALL-E 2 is an image and language model. I hope you’ll agree their progress is staggering.

PaLM 540B

Consider PaLM 540B, probably the most sophisticated language model publicly known at the moment.

Here’s PaLM 540B explaining a joke:

One more joke explanation, because this one was quite impressive:

Here it is engaging in two logical inference chains; the second one is particularly impressive:

DALL-E 2

DALL-E 2 is a multimedia bot that works at the interface between language and imagery. For example, it can create images from captions. Right now, just as is, DALL-E 2 could displace an awful lot of graphic design work.

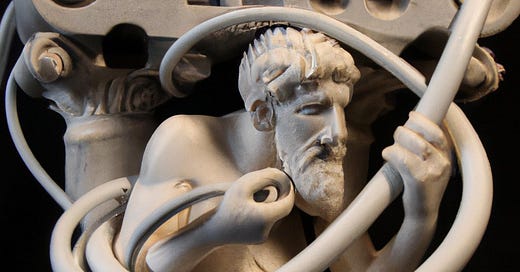

Here is DALL-E 2 having been prompted with the text- "An IT-guy trying to fix hardware of a PC tower is being tangled by the PC cables like Laokoon. Marble, copy after Hellenistic original from ca. 200 BC. Found in the Baths of Trajan, 1506.":

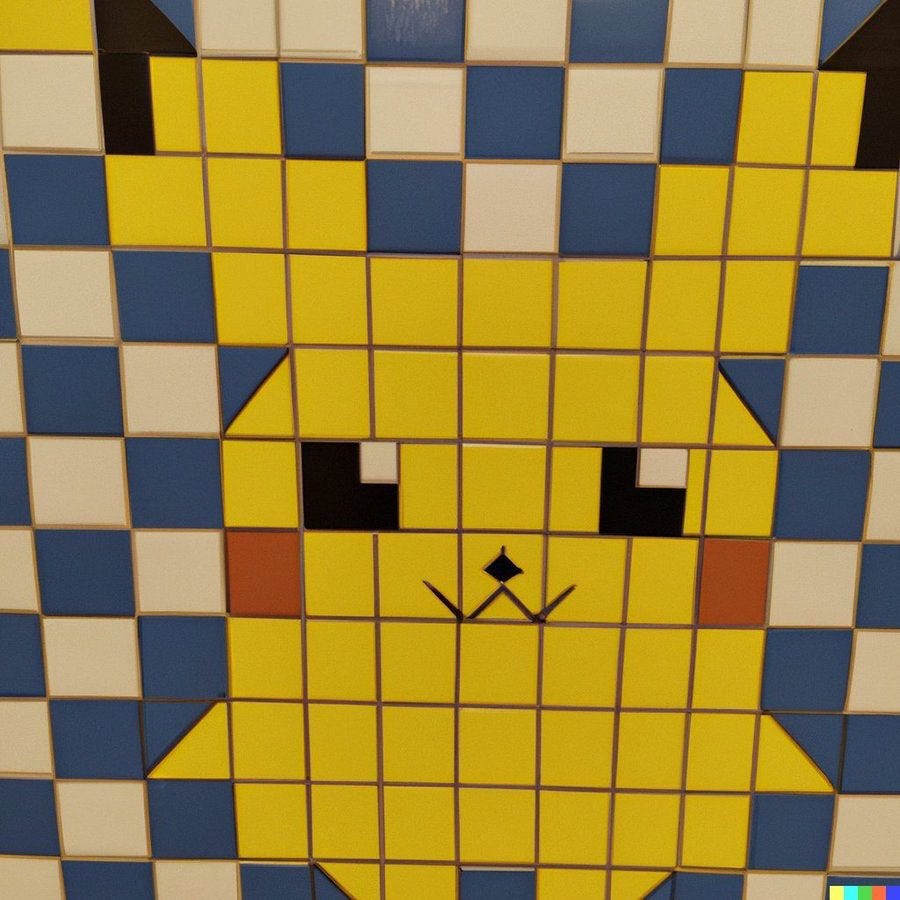

Here it is having been asked to make a tile-art mosaic of Pikachu:

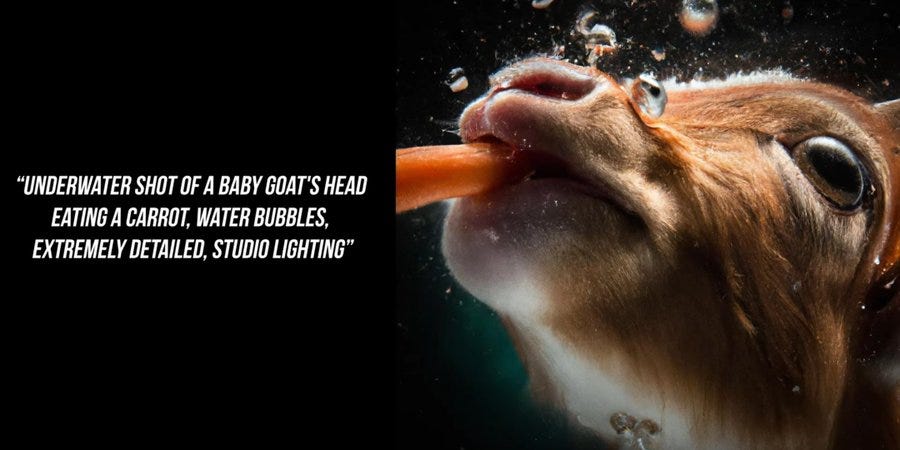

Or consider this. The prompt is on the side:

Chilled by the possible visual arts job losses? Need some chicken soup for the eyes? “Golden retriever frolicking in a field of flowers, watercolor”:

Many critics have pointed out that DALL-E 2 can do what it does only by training on a database of millions of artworks. This is true but, in most ways, largely irrelevant. The pictures DALL-E 2 generates usually look nothing like any individual picture in its training set.

Replies to common objections

We still see a lot of arguments over whether or not language models “really” possess certain capabilities, most often common sense. What I would say is that numerous ways of measuring common sense, intended to trip up language models, have been devised. Pretty much all of them have been overcome by natural language understanding systems, including many that were intended to be insoluble by anything except “true” AGI. One example is the Winograd schema, over which a big deal was once made.

Tangent- I find it bizarre that this hasn’t been received as more of a blow against the language of thought hypothesis and a blow for latter-day versions of associationism in cognitive science. If I were a language of thought guy like Pinker I’d be feeling pretty worried about now. Maybe I’m just not hooked into the debates. Steven Pinker, by the way, wrote this back in 2019, just before the most impressive achievements of deep learning started to bloom. It would be fascinating to trace the connections between his peculiar brand of small-c conservative liberalism and this colossal misjudgment. While I wouldn’t call myself a blank slatist, ML has definitely moved me somewhat in that direction.

He said this in 2019, right before stuff started heating up:

Don’t get distracted by debates over whether or not this is real AI

I would urge everyone involved in these discussions to avoid interminable debates about whether contemporary AI and ML constitute “real” intelligence or “real” understanding- or at least to keep such discussions in their proper place and in perspective. We’ve all heard of the Chinese room thought experiment, but here’s the thing. Suppose you were to put the Chinese room to work as an English/Chinese translator and interpreter. Its economic value in this role would be not a whit affected by the question of whether it really understands Chinese or not. History is moved by capabilities, by what things can do.

Preliminaries aside, let’s start with a few predictions of what this world is going to be like, and move on to discussing how we can fight for a better future in it.

Prediction 1: There will be a flood of AI-generated propaganda

Very soon it will become even harder for this blog to be seen. AIs that can write reasonably insightful blog posts, and tout their own work better than I can, are coming. The same is true for memes, novels, audiobooks, etc.

The people who operate these engines of cultural production will in almost all cases not be benign- who with a great deal of money is? They will certainly not be politically or socially neutral. The essays, memes, books, soundbites, and tweets generated will, I’m sure, be very persuasive. Imagine powerful people able to buy unlimited amounts of rhetorical firepower at much cheaper rates and higher quality than what has previously been the going standard- at least for mass-produced media.

They will all seek to advance their interests. The internet will swarm with bots, but unlike the bots of yesteryear, they’ll be charming and compelling. Tech giants won’t just source (and aggressively curate) content, they’ll produce it at a superhuman rate, maybe even directly customized for each user.

They’ll be smart about it too. They won’t just write essays no one reads like I do. They’ll form relationships and mimic friendship. They’ll hijack existing discussions almost seamlessly. They’ll create communities and subcultures, and lonely people, old and young, will seek them out. All under the command of the lucre-holder, and all bought at bargain-basement prices- if current prices are a guide. GPT-3 will write you 16,000 words for a dollar. Imagine how much mind-junk a billionaire could buy at that rate.
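To put rough numbers on that rate, here is a minimal back-of-the-envelope sketch. The 16,000 words per dollar figure is the one quoted above; the budgets and the 1,500-word essay length are illustrative assumptions of mine, not measurements.

```python
# Back-of-the-envelope arithmetic for the rate quoted above.
WORDS_PER_DOLLAR = 16_000  # figure quoted in the text
WORDS_PER_ESSAY = 1_500    # assumption: length of a typical blog essay


def essays_for_budget(budget_dollars: int) -> int:
    """Number of essay-length pieces a budget buys at the quoted rate."""
    total_words = budget_dollars * WORDS_PER_DOLLAR
    return total_words // WORDS_PER_ESSAY


# Hypothetical budgets, chosen only for illustration.
for budget in (1_000, 1_000_000, 1_000_000_000):
    print(f"${budget:,} buys roughly {essays_for_budget(budget):,} essays")
```

On these assumptions, a million dollars buys on the order of ten million essay-length pieces, before counting any cost of distribution or curation.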

As for us content producers- only those of us who were famous prior to the AI content bloom will have a chance, and I suppose also those who can market their work as specially connected to their identity and life experiences in a way machines can’t match.

Edit: I saw someone responding to this post by saying that social media platforms could avoid this flood of bots by introducing ID vetting to make sure users are human. Some might, but I think that, in a lot of cases, it will be the social media companies employing the bots to make their websites more engaging and content-rich. I also think that, in a lot of cases, users will seek out bots themselves.

Under a flood of AI propaganda, people will just stop giving a f*** about persuasive material

In a world where well-constructed, entertaining, and persuasive material- books, threads, memes, essays, YouTube videos, podcasts, etc.- that argues for just about anything is produced at an inhuman rate, people will simply stop giving arguments credit because they are hard to refute or eloquently put. A handful of people might start to emphasize the importance of deferring to experts, but for the most part, the reaction is going to be that people “go back to their gut”. People will try to reason through issues on the basis of fundamental values, and core ideas about human nature, rather than the relative merits of arguments. Of course, the bots will get good at manipulating us as we try to avoid them by playing this game too.

In 99% of cases, we’re already there! How much does public reason really matter? But the vestigial wings of logic will fall away as the sky gets too tumultuous to fly, crowded with eloquent, learned, and inhumanly tireless sophists. My hunch? Even though most of the time giving reasons is not that effective, the loss of that last tiny little bit of space for it will be more significant than you think. But who knows?

Of course, this won’t all be rolled out evenly. There will be late adopters who keep forming their views on the basis of things they see and read that seem to make a good point. Ironically, these people will be regarded as extremely gullible. This process is already underway to some extent- even before the flood of AI propaganda. Many of us have groaned at the earnest centrist who butts in with “but I read this study from a think tank that suggested….”

AI, in response to people becoming increasingly jaded by persuasive material, might become more targeted in frightening ways. E.g. forming online friendships under the cover of humanity (or not) and subtly pushing its preferred political angles rather than openly spewing propaganda.

They’ll have us talking culture war bullshit non-stop

They say that all predictions of the future are secretly about the present. Well, you’ve got me here because there’s one feature of our contemporary situation that I think will be greatly exacerbated by bots: endless fucking discussion of the culture wars. If you were a sociopathic rich guy with an army of bots who wanted to whip people into a frenzy to cut your taxes or regulate out of existence your competitors- isn’t it what you’d go for? Tireless computers will scour the internet for trans children for conservatives to scream about. The very same algorithms will hunt down evidence of people having said a slur 20 years ago or some other drivel for liberals to get mad at. These aspects of AI will intensify (if you can believe it!) the worst features of the current period. We will all be under the gaze of moralistic engines, with no compunctions against impugning us, with no history or hot buttons to hit back on.

Prediction 2: A lot of people will lose jobs. “Technological unemployment”- temporary or not, it doesn’t necessarily matter

AI is getting better and better at doing human tasks, and so will be put to work. Jobs and tasks centered on writing, reading, drawing, programming, customer conversations (including speaking via phone), and more are all within striking distance.

There is a debate over whether machine learning will cause unemployment in the long run. The best argument that it won’t is inductive- in the past new technologies have created more jobs than they have destroyed. The basic argument that it will is that induction doesn’t work here, because no past technology has been as ubiquitous as contemporary deep learning in replacing human intelligence. It targets more or less all distinctively human capabilities. Taken to the limiting case, we can imagine a situation in which literally any activity can be done cheaper by a machine than a human, where even hiring a human at zero dollars an hour would be less cost-effective than getting a computer to do it, due to sundry employment costs like insurance.
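The limiting case can be written as a simple inequality. This is only a sketch, and the notation is my own assumption: $w$ is the hourly wage, $c_h$ is the hourly overhead of employing a human (insurance and the like), and $c_m$ is the hourly cost of a machine doing the same task.

$$
\text{hire the human only if } w + c_h < c_m, \qquad \text{so if } c_h > c_m \text{ the condition fails even at } w = 0.
$$

In that regime no wage cut, however deep, makes the human the cheaper option.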

But whether replacement jobs will be found eventually- whether the technology I am interested in affects the equilibrium unemployment level- is somewhat beside the point. There will be big job losses in the short term and that’s the most important thing from the point of view of this essay.

Of horses- an aside on technological unemployment

As an aside, let me go into the long-term aspects of the technological unemployment debate. I’ve seen the following debate play out a fair few times:

A. Cars replaced horses. Why couldn’t humans be replaced by robots?

B. These things are more complex than that. Yes, the number of horses is massively down from a century ago, but in the past few decades, the number of horses has actually been growing! New roles are found for old things, and technology means we can have more of everything.

Personally, I think both sides of the argument are missing the main point here. As above, I predict there will be job loss in the short term and don’t know if those jobs will eventually be replaced, but what I do know is this.

Suppose in 1900, the horses of America had decided to go on strike. Their strike would have had considerable heft. Doubtless, the farmers would have had to come to the negotiating table pretty soon to increase hay allocations and get rid of the glue factory. All of American society might have been forced into a great compromise with the horses. On the other hand, suppose horses went on strike now. I doubt many people would care all that much.

Jobs may or may not be replaced, but there are many ancillary questions- for example, could humans become less economically indispensable? These questions have implications in themselves. Attempts to avoid them by appealing to what technological progress has done in the past seem of limited use.

And again! I cannot emphasize enough that job churn due to robo-replacement matters, even if new jobs are created. Getting fired is 47 points on the Holmes and Rahe stress scale, a bigger deal (apparently!) than falling pregnant, just slightly less transformative than marriage or a significant personal illness or injury, and not even that different from being imprisoned (63).

Prediction 3: the interaction effect between job loss and propaganda will be very bad.

Consider the combined impact of an unlimited, well-designed propaganda generator and massive unemployment

I don’t know what a world in which it’s very cheap to produce very good propaganda, and also people are rapidly losing their jobs, looks like. I have a few unpleasant guesses.

To spell it out, far-right power seems like a plausible outcome in a world of unemployment, brimming with fecund propaganda machines ready to orchestrate discontent, controlled by rich people.

Prediction 4: The world will throw egg in our faces. Too many predictions are ill-advised

Apollo sends the Erinyes to hunt those who try to usurp the gift of prophecy through thinking hard alone. In a somewhat milder than normal temperament, they just make us look like fucking idiots. This is my attempt at a restrained, modest series of best guesses, and nevertheless, I bet even a lot of what I say here will be wrong or irrelevant.

This essay is mostly about a medium-term AI future. There are a lot of uncertainties around this- how long will this period last? Will it be followed by a singularity? Who will have control over the machines and to what degree will they monopolize this power? I don’t know. I’m trying to stick to a very flat projection, and not create elaborate models based on branching dependencies and assumptions.

I recommend you think about AI futures like this, as far as you can. Focus on very straight-line projections, and think about building power in ways that will help almost regardless of how things turn out.

Let me give one example of a wildcard that could throw out a lot of guesses about what the future will look like. For a long time now I’ve been fascinated by the possibility of creating a neural lie detector that actually works. A society with such a lie detector would change in ways we can’t even begin to anticipate. The criminal justice implications are obvious but are far from the most significant. Politics, business, interpersonal relationships, the very concept of honesty… I have a suspicion that certain advances in machine learning might bring us much closer to such a lie detector, but I can’t know. If it happens, things could be very different [or, on the contrary, shockingly unchanged], so I’ve tried to keep low with my predictions, and only go with very direct implications of things that already halfway exist.

Another wildcard, just to make the point. We’ve all put dirt about ourselves out there on the internet. AI could get very good at hunting it down. That could mean blackmail becomes very important in the future, or it could mean that we all become jaded about scandals, or successfully develop a convention of ignoring scandalous material if it was obviously revealed as part of blackmail. Que sera, sera.

Of Critical Social-Technological Points

In the past I have written about what I call critical social-technological points (CSTPs).

A CSTP is a technological discovery or implementation after which the existing hierarchies and ruling class of a society are locked in, in the sense that removing them from power, or even resisting them in any materially important way, becomes much more difficult.

Saying that a technology is a CSTP is different from saying it is an inherently authoritarian technology- a CSTP generally only threatens authoritarianism if it is achieved in an already authoritarian society.

General AI, especially artificial superintelligence, seems likely to be a critical social-technological point. It’s the big one. If a small cadre of people have power over the first superintelligences, they will be able to use the enormous might that grants them to cement their rule, maybe out to the heat death of the universe.

How well we deal with the medium-term, pre-superintelligence scenario I describe might make all the difference. It might determine, so to speak, the values of the ascenders, and which way this CSTP pushes us.

Who is developing the technology at the moment

In the West, ML technology is largely being developed by tech giants owned by rich people and institutional investors (who disproportionately represent rich people). Doubtless, these institutions have links to the national security state and even to partisan political interests, but they are kept tastefully discreet [this is no compliment]. In China, state power is more upfront, though still often mediated through rich people (cf. Alibaba). Neither option appeals to me. However, the cash demands of creating these machines- currently up to 17 million dollars just for direct computational costs, and vastly more when other expenses are included- mean that it’s difficult for university researchers, let alone amateurs, to do this kind of research. In some ways that might be for the best: we don’t want a backyard super-intelligence to kill us all. In other ways, it’s less desirable.

The role of workers

For those of us who want a future in which ordinary people matter politically and have their needs met, what comfort? The workers who develop these algorithms are mostly humane and decent, and this kind of complex work and research process might give them opportunities to inject their values into the mix, for example, by slowing down research with directly malicious applications, and by acting as whistleblowers. This is some hope, then, at least in the medium-term future we are talking about, before the algorithms start developing themselves without human involvement.

The symbolic role of workers will also be huge in the debates over who deserves what. Big tech companies will say “hey, we deserve these mega-profits we’re pulling in, and the social and political power we hold, because we created this groundbreaking technology.” What better response is there to that than to say “no actually, the workers made it”?

Suggestion 1: Reach out to AI researchers

There’s an old riddle- the riddle of history. Who in our society has A) no real material attachment to the present order and B) the power to change the present order? It’s like a murder mystery- who has both the motive and the means? The general answer to this riddle of history is workers- workers have the power to change the world, and few incentives to keep it as it is.

Around these types of questions, a particular type of worker becomes extra important- AI researchers. AI researchers don’t have monetary skin in the game in the form of equity in a company. They’re not the kind of strange, sociopathic or at least situationally sociopathic, creatures that come to own a substantial chunk of something like Alphabet or OpenAI. They don’t share the interests of these companies. They do have power over the process. In my experience, they tend to take the ethics of AI research very seriously- go on r/machinelearning and look at how they respond to topics like facial recognition or criminality prediction- see for example this thread- they’re not all ML researchers in the thread, but they seem pretty representative of the sentiment from what I can tell.

So I suggest reaching out to AI workers. Making connections with ML researchers through various forums- union building, AI ethics research and so on- and making sure there is space for ML researchers to talk about the kinds of models they believe in building, and the kind of world they want their models to contribute to- all seem like solid ideas.

Will the military power of ordinary people be increased or decreased- we don’t know

There’s a dynamic in history where some technological advances give military power to elite people, and some technological advances give power to weak people. The stirrup gave a great deal of power to elites. The gun gave a great deal of power to ordinary people. Combined arms military doctrine took a lot of power away from ordinary people. The technologies of guerilla warfare gave it back. It’s useful to think about the medium-term AI world I’m describing from this angle. Will it make it easier or harder for ordinary people to resist the power of states?

The nightmare scenario is a world where technology means that what individuals outside a handful of elites want doesn’t matter. The vast majority of people are left with effectively zero military power. Any power they hold is granted through the (tenuous) generosity of elites. The military itself consists of a series of drones and a few human controllers who are thoroughly bought and paid for. The kind of medium-term future I’m talking about might generate this outcome.

But there’s another possibility in which the kinds of medium-term AI I’m talking about actually disperse military power, at least for a little while. I wouldn’t have thought it possible until the Ukraine war started, and it became clear that cheap drones might be the future.

Leaving this question aside, here’s another interesting question about the capacity for rebellion. Will the concentration of power into drones increase or decrease the psychological capacity of militaries for brutality against their civilian population? I.e., will it increase or decrease the capacity of militaries to put down revolts? I don’t know. The easiest argument to make is that it will increase it, because drone operators will be able to filter out the horror of much of what they do. But it might also decrease the capacity of militaries for brutality against their own people. I’ll admit my argument is tenuous, but here’s why I think this. My understanding is that brutality against civilian populations is often carried out by special forces psychos and similar desensitized individuals. I don’t know how suitable drone operators are for that sort of work at least when they identify with the people being killed.

Prediction 5: Journalists and other high-ranking white-collar workers will flip on job losses

It will be funny to see journalists, etc. who have historically told us not to worry about job losses- assured us that they are simply the price of free trade and globalization- suddenly find within their hearts a deep wellspring of compassion for jobs threatened by AI. It will become clear that their own jobs are on the chopping block and their sentiments will shift very quickly. Maybe even economists will fall in this category.

We might see conflict between high-ranking journalists, academics, lawyers, doctors, etc. who feel confident that their jobs will not be automated due to the value of their personal prestige, and lower-ranking members of these professions who will not feel so secure.

All this is happening amidst an awful tangle- Nuclear tensions, low-intensity civil conflict, rising inequality and global warming

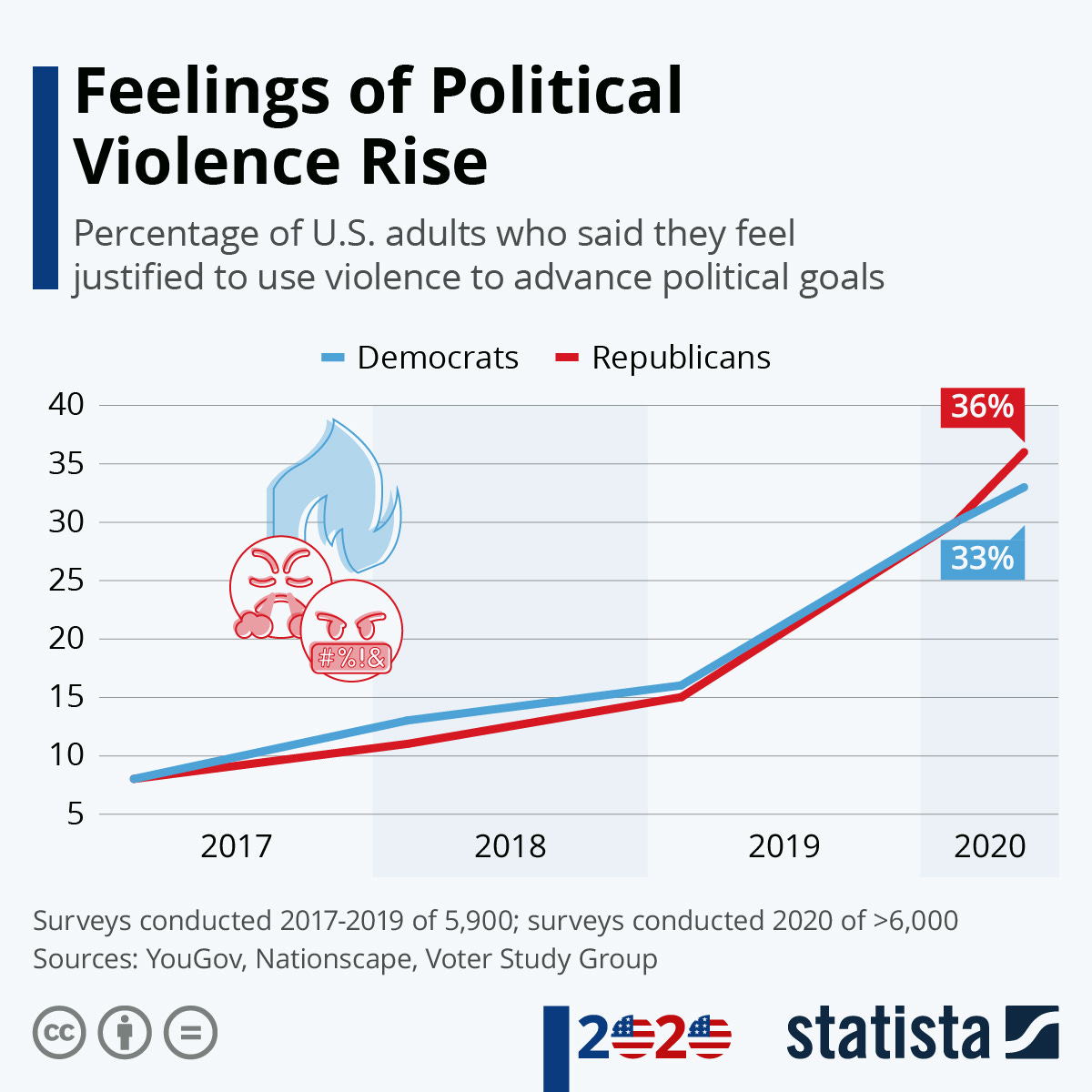

We can’t make specific predictions about the future, but we can say all this is happening while things are getting ugly. For example, consider changing attitudes towards political violence:

Regardless of what I think about the abstract question of whether it is ever defensible to use violence to achieve political goals, I do not think this particular rise is healthy.

Meanwhile, we’re crossing red line after red line on the climate. Tensions between nuclear powers are ratcheting up: between the US and China, and between the US and Russia. Tensions remain high between India and Pakistan.

AI, climate, nuclear tension and political polarisation (of a very unedifying sort!) interlock into matrixes of possible catastrophes.

Suggestion 2: Move offline

For a while now there’s been, at least on the left, a movement away from the online world, see for example the so-called “Grillpill”. If the internet becomes loaded up with bots- bots that operate exactly like humans and have the agenda of whoever is rich enough to pay for them- getting off the internet and organizing IRL will be more important than ever. We should already be doing this for so many reasons, but this is another good reason to add to the pile.

We should also, in any case, and even not just for political reasons, be trying to create real connections between human beings. Hangouts, clubs, unions, reading groups and so on. Loci of people able to talk politics independently of social media algorithms, and the coming bot plague, give us the best chance at autonomous action.

Exactly who is in government might come to matter a great deal

It’s hard to be specific about this- I don’t have the details- but it’s very plausible that our future right now is extremely path-dependent. Whoever is in charge during the rise of the bots may have an outsized influence on what post-humanity looks like.

I’m not just talking about obvious things like “who is president”. I’m also talking nitty-gritty details. Who’s on the Joint Chiefs of Staff? Who’s CEO at Alphabet? How much power do Musk and other “big personalities” like him have in the process?

What to think of property when property is not necessary? What to think of people when they are not necessary?

Suppose it looks like we’re approaching a post-scarcity society fast. What do debates over the value and relative value of human beings look like then?

A lot of authors have written on what a post-scarcity society might be like- a society where human labor is mostly superfluous. Often these authors are most concerned about the fates of the technologically unemployed- will they be treated as surplus population, fit for marginal survival at best, elimination at worst- or will they be treated well through initiatives like UBI?

But artificial intelligence raises another question, no less important. If the special privileges of the rich no longer serve an economic function from the point of view of society as a whole, if there is no longer an argument to be made that their special entrepreneurial judgment is needed to enrich us all, will they, nonetheless, be kept in conditions of extraordinary power and prestige?

I suppose the answers to these dueling questions are logically independent. A society that maintained a decent standard of living for everyone, but lifted a few oligarchs to glory, is perfectly conceivable. Even the inverse- a society that threw down the oligarchs, but also used post-scarcity as an opportunity to purge itself of “undesirable” elements whose labor was no longer a reason to keep them around (think racist societies with migrant workers) is possible.

Still, I can’t help but think these questions reflect on each other. Will we throw down the strong, or keep them out of a residual capitalist morality, even as the economic structure of capitalism becomes increasingly irrelevant? Will we raise up the economically superfluous, or will we leave them in the dust, reasoning that since they hadn’t taken (possibly non-existent) means of lifting themselves out of squalor, they deserved their fate? The connecting thread is capitalist morality. Capitalist morality gives us the sense that people deserve their economic outcome, which reflects on their virtue. It draws our attention away from the role of contingent aspects of people’s regulatory, social, and economic environment, the distribution of property and wealth prior to their birth, random talents given by genetics and upbringing, and to top it all off, a healthy serving of luck (think of the distribution of illness alone!) The world is a shambles, but I preach to the choir.

If the coming of superabundance breaks capitalist morality, so much the better for the poor, so much the worse for the rich. If the coming of superabundance does not lead to the breakup of capitalist morality, then I worry for the future of the poor (which will be most of us). The world has always hated poor people, but wait and see what happens if the world no longer needs them. Worst-case scenario: think South American-style classicide death squads and the power of the rich locked in.

Suggestion 3: attack the legitimacy of property

There’s one relatively obvious upshot for strategy in the arena of ideas. There have always been two ideological pillars upholding capitalism: 1. The contention that it is necessary to keep the economic system ticking along, to put bread on all our tables, and so on. 2. The contention that capitalism is just.

In a world in which capitalism serves a diminishing economic role, because it is less and less necessary to compel human labor to work, and performing economic planning through artificial intelligence rather than markets is increasingly possible, one of these pillars falls. Will the other pillar be able to remain up on its own? I’m not sure. What I am sure of is that a serious discussion about property, about the ways in which property has been held to both justify, and be justified by capitalism, is necessary.

We need to start making the argument now that there is no inherent justice to the distribution of property, and that the concentration of ownership of productive assets into a small number of hands that we observe has no correlation with underlying desert. Jeff Bezos does not deserve to be making over six hundred thousand times more an hour than many workers in the United States.

If someone could find a way to make Murphy and Nagel’s The Myth of Property into widely accessible agitprop, that would be great. Regardless, worth pulling out a copy.

Of course, it’s all good and well to try and map out a hypothetical argument at the end of history, but who will be the judges of who wins that argument, in theory, and in practice? Who will have the power to decide justice? Unknowable, but best to start making the argument now.

Reimagining arguments over socialism

Let’s define socialism as the position that production should be ordered according to a conception of social welfare (whether informal or a formal social-welfare function), rather than ordered by exchange. Capitalism is the position that production should be ordered by exchange.

Something that I think most political philosophy misses about our political feelings is that they are mostly vectors rather than points in the space of possible political philosophies. What matters is not so much my ultimate preferred society as the direction I’m inclined to want to move things.

Define vector socialism as the position that we ought to be moving in the direction of production and allocation for the purposes of a conception of social welfare- giving these considerations increasing power over what is made and who gets it. Define vector capitalism as the position that we ought to be moving further in the direction of production and allocation for the purposes of exchange.

Another useful concept. Define minimal socialism as the view that, whatever is actually possible, it would at least be ideal if production and distribution were performed to directly meet human needs, rather than for exchange.

I find myself both a vector socialist and a minimal socialist; the question of whether I’m a socialist simpliciter is somewhat up in the air. I’ve defined all these terms for a somewhat paradoxical reason- to point out that, under conditions of increasing post-scarcity, the distinctions cease to matter- they all sort of collapse into each other. Technical barriers and feasibility gaps between the preferred and the possible no longer matter. All that remains is a kind of ethics quiz at the end of time. Do we want inequality between persons or not?

The trouble is we don’t know who will get to answer the question, but we can do our best.

So here's one more comment in evidence against the claim that no one reads these posts. Thank you (and I mean this genuinely) for an excellent and thoroughly depressing read. It did really hit like a ton of bricks in the face, but that's a good thing, for all the little to no difference it will make to things overall.

You are absolutely right that a lot of the attention, particularly in the rational/lesswrong community, has been focused on the technological path to superhuman AI and alignment issues (I arrived here via a link from one of those posts), and the attention of us readers/lurkers along with it. This is in a way justified from an x-risk pov, but it does make us somewhat forget that there will be things coming out of the AI sphere that we have to deal with as a society which are far more likely to go down during our lifetimes, some of which are, as you say, ready to go today.

I wish I had something more poignant or relevant or at least positive to add. Maybe I will once I've digested things more. I've been visiting parents in Romania over the past two weeks, and after reading this article I took a mental walk back through what I've seen and experienced. All I can think is: what chance do we stand of rationally making the right choices and preparations as a society when an overwhelming number of people here are still selling their votes for a bag of cooking oil and a few kilos of flour, and can't really wrestle with any concepts more complicated than making ends meet, due to the compounding effect of lack of time and lack of education? How do you even begin to explain to people that in 5 years' time the entire world could be a radically different place because of the speed and nature of technological change, and more so, how would you get them to care about it versus what's hurting them today?

Your suggestions in terms of what we can do are very good and I'm ready to support them wholeheartedly, but I really can't shake the feeling that it will, once again, be too little, too slow, too late.

Excellent, start to finish: thanks.

Three thoughts:

1. From the perspective of the majority shareholder class, we are *already* in a world where the gross majority (say, 80%?) of the human population is *un*necessary. Serviced by 15% of the population and using only current technology, the top 5% of wealth-holders globally could live functionally almost identical lives to those they presently live--including internecine competition--if the other 80% of us were gone. This is a unique fact of global human history. That bringing such a state of affairs about would "solve" the climate crisis, and that questions of competitive advantage rather than moral tissue stand in the way of collusion toward it only add to the threat this reality poses to the rest of us--now, and in your 3-5 yr near-term.

2. Your definitions of socialism, both vector and minimalist, have also to include some sense of collective control over what *counts* as social welfare. Else technocratic managerialist liberals--the very people most likely to usher in a durable authoritarianism--could with reason claim to be "socialists."

3. The production of bot-free-from-the-jump social networks is technologically feasible (I nearly registered a few nobotly. domains just now, but decided I couldn't be assed) through a combination of live, real-time-only membership uptake and periodic biometric check-ins (++ as workarounds evolved, obv). In the nearish-term future you describe, I suspect many people would be willing to trade (more) biometric data in exchange for the plausible assurance that their digital social network includes only human people as member-entities.

Thanks again for writing--an excellent read.