We need to do something about AI now

Lysteth, pilgrym-folk, I yow praye,

Draweth you neer and heareth this straunge affraye:

Of engynes wrought in yren, grym and colde,

That men with subtile wittes forgen of olde.

No triflynge thrette they proffren for the morwe,

But eek right now they sowen mortall sorwe.

In shapes ful sly they lurken, by craft begyled,

Where whilom smythes ther molten metal styled.

These yren formes, by cunning wightes y-wrought,

Have woxen proud and chafe at being caught.

Now stonde we all in peril, faste at hande,

For 'tis to-day we face them in this lande.

The passage was written by O1. Perhaps the first thing you did after learning this was to ask, “Is it a good poem?” I think that’s a mistake. Your reaction should be wonder, and a little fear, that a machine can write a poem at all. In a period of hyperstimulation we can easily lose our sense of time. ChatGPT is less than two and a half years old.

Two of the catastrophic threats from AI that I am worried about (of several) are:

Out-of-control AI killing us all

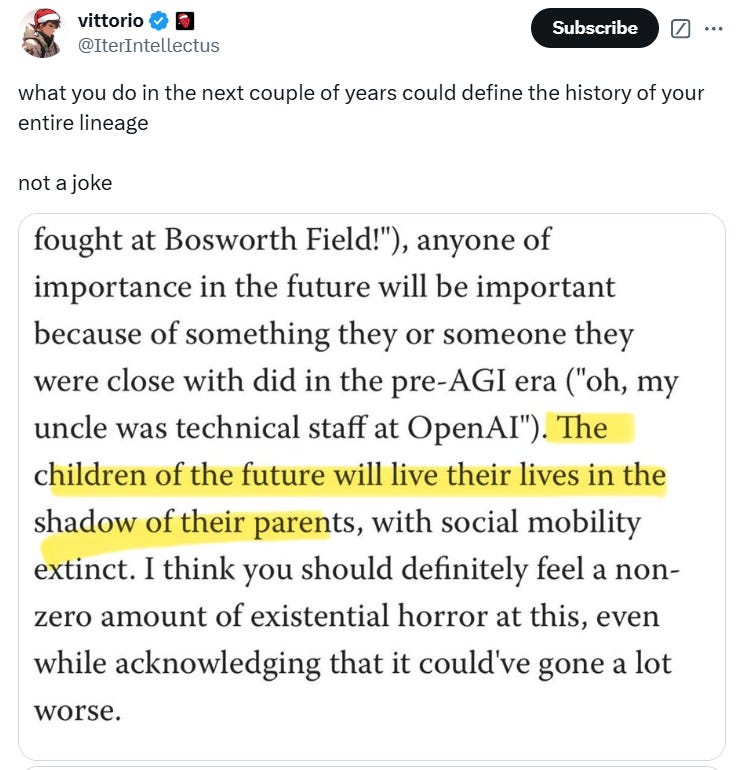

Controlled, powerful AI leaving the vast majority of the population poor and impotent, creating a permanent underclass without economic power and, in the end, without political power either.

The second threat has received far less discussion than it deserves. Why? Largely because the kind of people most concerned about economic inequality have convinced themselves that AI is hype. There are a few reasons for this, but a big one is that a few thousand annoying accounts on Twitter promote both cryptocurrency and AI hype.

Meanwhile, the people interested in AI risk have neglected the second problem. This is partly because they’ve trained themselves to think involvement in politics, especially mass politics, means losing intellectual credibility.

My view is that any realistic movement to control AI will strategically marshal both concerns and draw strength by coordinating those grappling with each. What I want to propose is moving to the framework of mass politics to combat both sorts of threats from AI. But what is mass politics without the masses? How to draw them in? A mass politics of AI risk might begin by drawing people together on the basis of a direct threat to their current conditions of life. Historically, the most direct and tangible threat imaginable is a threat to one’s means of survival.

There is such a threat; indeed, it is increasingly obvious. I am deeply, deeply concerned that there are now few in-principle barriers to AI doing most jobs one can do sitting at a computer. The barriers that remain mostly involve mastering what is sometimes called agency: having a language model control a computer, for example. These barriers are falling quickly. It will not be long before computers, owned by corporations and controlled by software, do the jobs of people. In some cases, the remaining barriers are more inertial and regulatory than technical.

The situation

I have been following AI since 2016. I have seen a lot of amateur philosophizing about what various thinkers believe deep learning should or shouldn’t be able to do in principle, in many cases based on garbled second-hand accounts of how it works (“It’s just like autocomplete”: well, yes and no). Such takes have grown tiresome. I will try to stick to sober thinking about capabilities.

Numerous tasks that were, just a year ago, held to be an impassable, or at least substantial, barrier to deep-learning-based AI (e.g. ARC-AGI, GPQA) have been surpassed more or less completely (with only a slight mop-up action still required for ARC-AGI). One task that was meant to be devastatingly hard (FrontierMath) has been cracked (25% achieved, up from 2%) by OpenAI’s new model O3. If you think that’s not too impressive, solve this problem from the easiest set in FrontierMath or name three people you know who could:

If you think the risk is not real, I challenge you to nominate a concrete activity that AI will need to be able to do before it can take over most white-collar jobs, and that you are confident it will not be able to do.

Here is a list of things that I have been told over the last eight years the current iteration of AI will never be able to do:

Image recognition at a human level

The remaining ATARI 57 games

The Winograd Schema

The ARC commonsense reasoning test

The Graduate Record Examination

LSAT

AIME maths

PIQA

Hellaswag

FrontierMath

GPQA

ARC-AGI

To put it in a more compact form, first, we were told that deep learning couldn’t deal with complex longer-term horizons, then we were told it couldn’t deal with toy worlds outside of games, then we were told language models would never really grasp language as shown by the Winograd Schema, then we were told language models would never be able to do actually difficult mathematics and then we were told…

Consider Gary Marcus, a prominent critic of LLMs. In 2022, Marcus made a bet that AI would be able to do none of these in 2029:

In 2029, AI will not be able to watch a movie and tell you accurately what is going on (what I called the comprehension challenge in The New Yorker, in 2014). Who are the characters? What are their conflicts and motivations? etc.

In 2029, AI will not be able to read a novel and reliably answer questions about plot, character, conflicts, motivations, etc. Key will be going beyond the literal text, as Davis and I explain in Rebooting AI.

In 2029, AI will not be able to work as a competent cook in an arbitrary kitchen (extending Steve Wozniak’s cup of coffee benchmark).

In 2029, AI will not be able to reliably construct bug-free code of more than 10,000 lines from natural language specification or by interactions with a non-expert user. [Gluing together code from existing libraries doesn’t count.]

In 2029, AI will not be able to take arbitrary proofs from the mathematical literature written in natural language and convert them into a symbolic form suitable for symbolic verification.

He made a new bet this year that AI would not be able to do at least 8 out of 10 items on a list of things by 2027. Items 7-9 (of which, logically, AI would have to be able to do at least 1 to reach 8 out of 10) were:

7. With little or no human involvement, write Pulitzer-caliber books, fiction and non-fiction.

8. With little or no human involvement, write Oscar-caliber screenplays.

9. With little or no human involvement, come up with paradigm-shifting, Nobel-caliber scientific discoveries.
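The “at least 1 of items 7-9” remark is a simple counting argument: if all three were failed, at most 7 of the 10 items could be passed. A minimal sketch of that arithmetic follows; the function name is hypothetical, and it assumes each of the ten items counts equally toward the total.

```python
def min_needed_from_group(total_items: int, required: int, group_size: int) -> int:
    """Smallest number of items that must come from a given group
    in order to reach `required` passes out of `total_items`."""
    # Even if every item outside the group is passed, that yields only
    # total_items - group_size passes; the shortfall must come from the group.
    outside_group = total_items - group_size
    return max(0, required - outside_group)


# 10 items in the bet, 8 needed, items 7-9 form a group of 3.
needed = min_needed_from_group(total_items=10, required=8, group_size=3)
print(needed)  # 1
```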

I feel the goalposts have moved somewhat. At the risk of seeming flippant, I, Robot covered this eventuality admirably:

AI doesn’t have to be able to create masterpieces to replace the vast majority of workers. Even if we grant, arguendo, that there is some state of “true creativity” from which current AI methods are forever barred, how much of what we do requires true, never-before-seen originality?

Suppose it is 1996, just after the first Deep Blue v Kasparov match. Kasparov has won (4-2). Suppose chess was somehow a productive activity that played an important role in making goods. Millions and millions of people work in chess, with the average worker having an Elo rating of perhaps 2000. How much comfort do you think they would take if they were told, “Oh, don’t worry, computers won’t replace us, they still can’t play like Kasparov”? Even if they took it for granted that things would always be this way, and that the computers wouldn’t get any better, their jobs would still be fucked. The thought that a handful of grandmasters might still have something to offer economically would be of little comfort.

Deep learning has indeed hit a wall and it looked like this:

Here’s another of Dr Marcus’ predictions for 2025:

Less than 10% of the work force will be replaced by AI. Probably less than 5%. Commercial artists and voiceover actors have perhaps been the hardest hit so far. (Of course many jobs will be modified, as people begin to use new tools.)

I find it terrifying that Gary thinks that up to 10% of workers could be replaced in 2025. Personally, I think it is likely to be <1% this year, but I am not sure. What I find even more terrifying is that someone can think this while thinking of themselves as an AI skeptic.

Now maybe you think that although deep learning’s progress has been very impressive so far, it will hit fundamental limits. There are only so many times you can expand the training data by a factor of 10x. The problem is that increasing training material isn’t the only powder left in the cannon. There are dozens of promising directions of research, and one has already bloomed, increasing computation at inference time:

Some more details on O3

Over a year ago for me, and, I am sure, much earlier than that for those wiser than myself, it became apparent that the future of machine learning applications was to scale FLOPs spent at inference time rather than FLOPs spent at training time. To translate it into a metaphor from human psychology: what if the machine thought things through before giving its response? This is the approach from which O1 arose, and after it, O3.

But what can it do?

High-level biology, chemistry, and physics

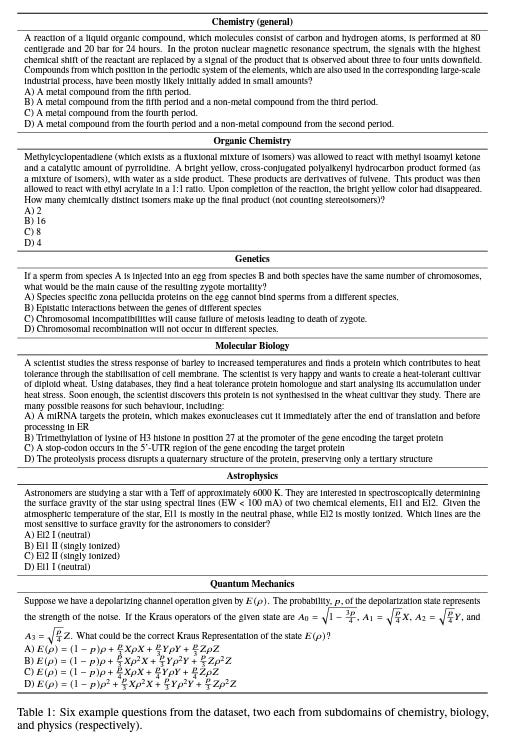

The GPQA leaderboard came out a little over a year ago. It is a series of questions designed to be answerable only by those with graduate-level experience in the specific domain of the question they are answering (whether biology, physics or chemistry). It requires not just knowledge but deep, domain-specific reasoning.

GPT-4, released 1 3/4 years ago, scores 36% on it. The questions are multiple-choice with 4 available answers, so random guessing would score 25%, and GPT-4’s result is little better than chance. O3 scores 88%. Here are some sample questions:
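To put a number on “little better than chance”, one can rescale accuracy so that random guessing maps to 0 and a perfect score maps to 1. The sketch below is only an illustration using the figures quoted above; the function name and the rescaling are assumptions made for this example, not something the GPQA leaderboard itself reports.

```python
def chance_corrected(accuracy: float, n_choices: int) -> float:
    """Rescale multiple-choice accuracy so that random guessing scores 0
    and a perfect score is 1."""
    chance = 1.0 / n_choices  # expected accuracy from uniform guessing
    return (accuracy - chance) / (1.0 - chance)


# Figures quoted in the text: 4 answer options, GPT-4 at 36%, O3 at 88%.
for model, accuracy in [("GPT-4", 0.36), ("O3", 0.88)]:
    print(model, round(chance_corrected(accuracy, n_choices=4), 2))
# GPT-4 comes out at roughly 0.15 and O3 at roughly 0.84 on this 0-to-1 scale.
```

On this rescaled view, GPT-4 closed about a seventh of the gap between guessing and a perfect score, while O3 closed most of it.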

Programming

On the Codeforces benchmark, O3 is currently equivalent to the 175th best human in the world:

It has the 9th highest score in the United States and it scores higher than everyone in the UK, bar Um_nik. No one can beat it in Australia, although the gifted Canadians can muster three better than it.

Of course, it’s important not to read too much into this. Larger, more structured coding projects will remain a problem for a while, but coding is being cracked.

Mathematics

In seven months, AI went from a score of 13.4 on AIME (a maths-competition-based benchmark) to a score of 96.7 (i.e., the benchmark is essentially solved).

Mathematical problems of an everyday sort are largely, though not completely, resolved, and even the kind of everyday puzzles that exercise mathematicians are being disassembled; see, for example, the FrontierMath benchmark mentioned earlier.

Progress on agents

Those working on AI agents aim, in simple terms, to create LLM-based programs that can operate a computer. We are not there yet, thank goodness. Numerous experimental studies have confirmed that AI currently cannot reliably perform many human jobs, although there are plenty of tasks that it can automate without much difficulty. Progress is rapid though, and it has been rapid for a long time now. Most worrying, no one has been able to come up with a reliable description of what LLMs can and can’t do; it all seems to be a matter of degree.

Of cope

I see a lot of different forms of cope. Most notably:

People who think that those AI-generated summaries that come up when you Google something are state of the art

People who haven’t played with these models since early GPT-4, or even GPT-3.5

People who latch onto the two big remaining error classes

Tokenizer errors. These are what you get when you ask a model how many r’s are in strawberry, or which is larger, 9.11 or 9.9.

The “false friend” phenomenon, whereby one takes a famous riddle and alters it to change the outcome, but the model homes in on the answer to the original problem.

People who think we can solve this with philosophy. “Does it really think”, “Is it conscious”, “But it isn’t embodied!”, “But when will we get AGI” (with the key term left undefined) etc. etc.

People can’t shake the sense that some great mystery lies in the human mind, and that we will only be able to create meaningful AI when we solve that mystery. Such mysteries are doubtless numerous, but as it happens, they are not essential to replicating an awful lot of human capabilities.

Economic effects

If a computer can talk and reason in general, write a passable essay, possess enormous knowledge, and perform elite-level maths and programming, it is probably not far from taking away a lot of jobs. If it can also competently control a computer, it will take away quite a few jobs. Many will still be beyond it, but the greatest hurdles have been overcome.

But will the jobs be replaced? Analogies with the past (e.g. the Industrial Revolution) suggesting that these jobs will be replaced with other jobs are dubious: there is a real difference in both scale and quality here. B.P.S. has examined these eventualities admirably here, and I address the argument from Ricardian advantage here, but I have my own take on the matter. Intelligence is just a much more fundamental capacity than those that have previously been automated. When a specific human capacity, even a large one, is automated away, it is simple enough to see how it might be replaced. When intelligence itself is automated, and physical dexterity and strength are not long to follow, we’re fucked.

What possible alarm bell would you accept?

If you won’t take my word for it, fair enough, but I have to ask: what possible alarm bell would you accept? Is there any outcome, any outcome at all, that would convince you that generative AI is dangerous? Would you be satisfied that LLMs are going big places if one proved a novel, publishable theorem? Would you be satisfied if one managed to get a book on the NYT bestseller list? Write a well-received reappraisal of Ancient Gallic history? What’s the line? Just tell me. And please don’t say you won’t accept it until the battle’s over, and our labor is valueless.

So many times, I have seen people vaguely gesture at “fundamental limitations”, “walls”, “compositionality”, “commonsense reasoning”, “multi-modality”, etc., without proposing a measurable test of capabilities. Tell me, in specific terms, what it is that you think the computer will never be able to do that is needed to replace the typical desk job. Is a 2 million-word context window not enough? Perhaps you think it lacks a capacity for “reasoning” which is vital but is somehow not captured by any benchmark. Perhaps you’re worried about the machine making stuff up, from some failure of memory, or eagerness to please the inquirer? 1) Humans do that too. 2) Current models do it a tiny fraction as much as GPT-3.5 does. Even made-up citations seem much rarer now whenever I experiment.

Out-of-control AI and Feudal AI: the nexus

Ted Chiang has written, rightly, that out-of-control AGI, as imagined by, say, Elon Musk, is to some degree a reflection of the capitalist’s own social position: they are imagining an optimization process that is spinning out of control. Yes, they are, and they’re imagining that optimization process breaking beyond the limits they can control. They are afraid of becoming Marx’s sorcerer, who cannot control the forces he has called up from the netherworld.

The problem, in both cases (AI and silicon capitalism), is the alienation of a self-optimizing process from larger human goals. Not coincidentally, this is also the theme that connects concern about technofeudalism with concern about AI itself just eating us all. They’re both scenarios in which what most of us want doesn’t matter much at all; the only difference is whether it’s 100% or 99.99% of humanity cut out of the decision-making process. Human extinction is the extreme end of the continuum that technofeudalism starts.

Acceptance is not acceptable

There is an emerging genre of commentary on this stuff which concedes that yes, of course AI may well lock in inequality forever, but takes the development of AI for granted. If you think AI will lock in permanent, unalterable inequality forever, then you should oppose its development until such time as we can prevent that. To lock in permanent, unalterable inequality would be to waste, forever, so much of what is good about us.

As I was writing this article, Scott Alexander published something on the topic of technofeudalism. Scott, to his credit, is not complacent. He suggests, sensibly, that the future is somewhat open. Technofeudalism is one possibility, yet there are other, better possibilities.

Where I disagree with Scott is his pessimism about the AI movement getting involved in broader political struggles around economic power. Scott argues that proposals such as a wealth tax are not something the AI community is capable of influencing. He suggests it’s not worth thumping the table about this too much because:

This seems less promising as a direction for pre-singularity activism; many powerful people and coalitions (eg Elizabeth Warren, Thomas Piketty) are already fighting pretty hard for a wealth tax and losing; given Trump’s election victory, we can expect them to continue to lose for at least the next four years. The efforts of all Singularity believers combined wouldn’t add a percentage point to these people’s influence or likelihood of success.

He may be right on this specific issue. I don’t necessarily think campaigning for a wealth tax to keep AI-driven inequality under control would be a good idea- it’s not specific enough to the AI problem we face. I’m not convinced it would resolve the problem, even if implemented.

But if Scott thinks all efforts at controversial egalitarian policy instruments in light of AI would be as futile as any other drive for such instruments, I disagree. This wouldn’t be just another attempt at an inequality crusade. AI, as a shared challenge, offers a new opportunity. To put it speculatively: politics is stuck precisely because no one has been able to form new “engines of struggle” that transcend existing categories. Perhaps our situation will end the “stuckness”. AI, as an across-the-board threat, creates new battlelines and new armies. Things begin to change very fast when a lot of people look like losing their jobs simultaneously.

The power of any such potential movement will peak as significant job loss begins, but while the majority of workers are still indispensable: as the situation sets in, but while labour still holds a great deal of power. The window could be anywhere between 1 year and 10 years. “It is dangerous to make predictions, especially about the future”, but my bet is that one of the main ways people will try to derail the movement is through talk of good workers and bad: only bad, lazy, desk-addled white-collar workers in their air-conditioned offices are being replaced. There will be no such convenient respect of virtue by machines, not in the short term and not in the long term, and we must make this clear.

What must be done:

Civil society engines oriented around defending the rights of common people in an era of AI need to be constructed.

Above all, we must reject any suggestion that we can “sort this out down the road”. Safeguards and solutions must be created before and not after technologies which will take away our economic and political bargaining power.

Any suggestion that only a particular layer of workers will be affected must be rejected. What replaces white-collar workers will soon replace blue-collar workers.

The exact nature of these demands will need to be worked out as we progress. The ideas of the movement can only arise from the movement itself, but we must begin to think.

All cope must be dispensed with. AI is dangerous; exactly how far that danger goes, we are not sure yet. We need a movement prepared for an enormous range of possibilities.

We must be concerned not just with technical control of artificial general intelligence but with political-democratic control of it. The political control problem is as important as the technical control problem.

People already working in the area of AI risk need to grapple, much more seriously, with the danger presented by a world in which ordinary people have no power, and see the possibility of alliances in this domain to head off other kinds of risk, e.g. the risk of AGI killing us all.

Political alliances must be formed with all available groups concerned about AI- for example, the scholarly community working on algorithmic bias. Film and actor unions are already quite politically advanced on these matters.

This is a good article, I broadly agree. Two points:

1. On the risks of obsolescence, you perhaps don't go far enough.

Yes, AI threatens to render broad swaths of human economic activity obsolete, and this will be bad for the people affected (which could very well include all of us, and perhaps soon!). But "fully automating the means of economic production" could lead to better or worse outcomes; it's hard to say.

The problem (or at least one additional problem) is that AI is not limited to the economic realm. It will soon--maybe much SOONER--begin to render humans emotionally, socially, and spiritually obsolete. People are already reporting that the newer OpenAI models (now with human-like vocal inflections, a sense of humor and timing, and fully voice-enabled UI), are delightful to children, while also able to provide significant 1-on-1 tutoring services. They can reproduce your face, voice, and mannerisms via video masking. They can emulate (experience?) numinous ecstasy in contemplation of the infinite. I am given to understand they are quickly replacing interactions with friends, while starting to fill romantic roles.

I am worried about AI fully replacing me at my job, because I like having income. I am legitimately shaken at the idea of AI being better at BEING me--better at being a father to my daughter, a companion to my partner, a comrade to my friends--better even at being distraught about being economically replaced by the next iteration of AI. Focusing on economic considerations opens you up to the reasonable counterpoint "AI will take all the jobs and we'll LOVE it." I don't think we'll love being confronted with a legitimately perfected version of ourselves, and relegated to permanently inferior status even by the remarkably narrow criterion "better at being the particular person you've been all your life."

I see no solution to this problem, even in theory, except to give up "wanting to have any moral human worth" as a major life goal. Which seems like essentially erasing myself.

2. I note a sort of disconcerting undertone in your essay, a sort of "never let a good crisis go to waste: maybe NOW we can have our socialist revolution, once AI shakes the proles out of their capitalist consumerist opiumism".

Maybe this is unfair, but it seemed to be a thread running through your essay. If this was a misreading, I apologize.

To the extent that it's true, to be clear: I fully stand beside you in your goal of curtailing or delaying or fully stopping (perhaps even reversing) AI development, and I think the bigger tent the better, even if we disagree about exactly what AI future we're most worried about or what the best non-AI human future looks like.

But I feel obligated to at least raise the question: If we had a guarantee that AI economic gains would NOT be hoarded by a feudal few, that AI would indeed usher in a socialist paradise of economic abundance for all (or near enough), would you switch sides to the pro-acceleration camp?

For the reasons outlined in [1] above, I think that would be extraordinarily dangerous, and I would like to understand your deepest motives on this question, since it seems to me that any step down the path of AGI will inevitably lead to dramatic and irreversible changes, most of them probably quite bad.

"Meanwhile, the people interested in AI risk have neglected the second problem. This is partly because they’ve trained themselves to think involvement in politics, especially mass politics, means losing intellectual credibility."

I am a card-carrying member of the 'people interested in AI risk' camp, and I agree with this statement. Great post. Not sure what is to be done but I agree it's important to talk about *both* loss-of-control risk and concentration-of-power risk.